Ruminations on computational geometry, algorithms, theoretical computer science and life

Monday, May 21, 2007

Creating a new publication model

I like to gripe about our current conference/journal system, and how ungainly a method it is for publishing and disseminating quality work. Not surprisingly, other areas of computer science appear to have similar issues.

Hal Daume, my colleague at the U. of U., has sparked off a rather interesting discussion with his post on NLPers about the need for a new open-access journal for NLP research. The original motivation for his post is partly NLP-specific, but the evolving discussion over at his wiki provides a closeup view of the process of designing a new publication model that sits somewhere between traditional journals and traditional conferences.

It's too early to tell what will come of this, but if their model gains some traction, it might provide a good exemplar for future reform in other disciplines in computer science.

Labels: community, publishing

Sunday, May 20, 2007

Numb3rs wins NSF award

I used to blog every episode of Numb3rs in its first season, and got bored of doing this soon after. However, I've continued to watch the show; I don't have the heart to avoid watching a show that involves mathematics (and more often than not, computer science). It's been surprisingly decent in its three years, as long as you're willing to concede all kinds of miraculous data mining feats performed in the span of a few hours.

However, I always wondered if it could make it on a mainstream network for very long. After all, ratings are king, and how long could such a tech-heavy series last ? Besides, the crime procedural aspects of the show are hardly unique, and with the monster success of CSI, even more scientifically oriented crime series were clogging up the airwaves.

Thus, I was pleasantly surprised to hear that Numb3rs is now the "most-watched show" on Friday nights, clocking in at around 12 million viewers. It's been picked up for a fourth season, and ended season three with a dramatic moment, as well as interesting existential angst for the main characters. In fact, I suspect one of the reasons Numb3rs has done so well is that they've done a fairly realistic job of portraying some of the tensions inherent in the life of the researcher (even if the mathematics itself, and the way the characters talk about it, is still somewhat cringeworthy).

The NSF (or technically, the NSB, the board that oversees the NSF) has recognized the success of Numb3rs as well. The show and its creators were just given a public service award for

...extraordinary contributions to increase public understanding of science. Recipients are chosen for their contributions to public service in areas such as: increasing the public's understanding of the scientific process and its communication; contributing to the development of broad science and engineering policy; promoting the engagement of scientists and engineers in public outreach; and fostering awareness of science and technology among broad segments of the population.

Friday, May 18, 2007

8th carnival of mathematics

It's back-to-school night at the carnival of mathematics. Time to revisit, reflect, and ponder on things we think we already know.

Estraven at Proving Theorems wishes that mathematicians today spent more time revisiting and bring up to date older, more inaccessible works of mathematics. This is discussed in the context of a re-publication of some of Grothendieck's early work on schemes.

There's a lovely quote at the end of the article, one that I cannot but help share:

Burnside's Lemma ultimately deals with counting symmetries: Ian Stewart has a new book out on the history of symmetry titled, "Why Beauty is Truth: A History of Symmetry". In a post at the Brittanica blogs, he describes why he decided to write this book.

In an ongoing series, John Amstrong continues his unapologetic look at coloring knots. I'd say any topic that has phrases like 'involuntary quandle' is worth studying. Of course, the original tying-yourself-in-knots proof was the diagonalization method of Cantor, which Mark Chu-Carroll is kind enough to explain to us, while he takes a timeout from beating errant creationists with sticks of logic. Mark notes that he's been going back to some old books on Set theory and is thoroughly enjoying them, another example of the theme of the day :)

Godel's incompletenes theorem is another of the tying-yourself-in-knots variety, and this year's Godel prize winner is a result in the same vein, showing why certain "natural proof structures" cannot be used to prove P != NP. Siva and Bill Gasarch have the scoop.

Walt at Ars Mathematica catches up to the Bulletin of the AMS, highlighting some nice expository articles that should make Estraven happy.

It's time to get educational now. Dave Marain helps high school math teachers everywhere with lesson plans for teaching quadratic systems and tangents without calculus. Mikael Johansson shows bravery far above and beyond the call of duty, explaining fundamental groupoids to a group of 9th graders. Heck, I don't even understand what a groupoid is, and I read John Baez all the time !

jd2718 shows his students how Fermat is connected to them. It would be remiss of me not to give a shout out to the Mathematics Genealogy Project. We've all heard the horror stories about the students who write "dy/dx = y/x"; Dan Greene at The Exponential Curve talks of students who write "log 5/log 2 = 5/2" and wonders if we need to change the notation for log to something more "mathematical".

I am delighted, and surprised, to see the quality of reports produced in the undergraduate

automata class that Leo Kontorovich is TAing. Read more about what his students did here. Andy Drucker weaves tales (tails?) of dogs eating steak in mineshafts, and cats climbing GMO-compromised trees, to explain some of the subtler details of computability theory.

We're almost ready to wrap up this edition of the carnival. Chew on some simple brainteasers that will excercise regions of your brain that you may not have known existed ! And review the history of calculating devices, with some speculation on what future calculators might look like.

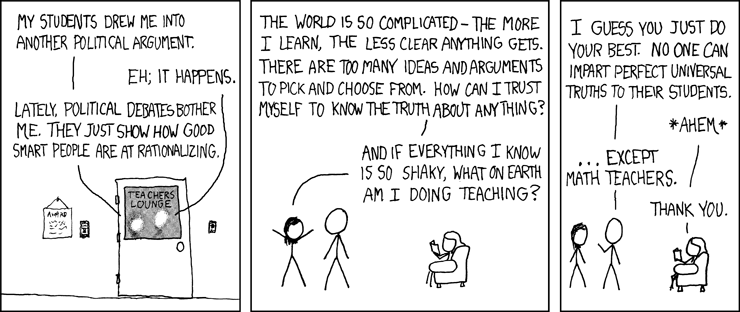

Finally, no carnival of mathematics would be complete without a cartoon by XKCD:

And that's all, folks ! Keep those posts coming. The next Carnival of Mathematics is in two weeks, hosted by JD2718.

Estraven at Proving Theorems wishes that mathematicians today spent more time revisiting and bring up to date older, more inaccessible works of mathematics. This is discussed in the context of a re-publication of some of Grothendieck's early work on schemes.

There's a lovely quote at the end of the article, one that I cannot but help share:

I could of course keep writing paper after paper. But there is so much beautiful mathematics out there that I don't know yet, and I want to read at least some of it before I dieI remember first sensing beauty when I first learnt Burnside's Lemma: Leadhyena Inrandomtan at Viviomancy has a detailed post on this simple, and yet elegant result in combinatorics.

Burnside's Lemma ultimately deals with counting symmetries: Ian Stewart has a new book out on the history of symmetry titled, "Why Beauty is Truth: A History of Symmetry". In a post at the Brittanica blogs, he describes why he decided to write this book.

In an ongoing series, John Amstrong continues his unapologetic look at coloring knots. I'd say any topic that has phrases like 'involuntary quandle' is worth studying. Of course, the original tying-yourself-in-knots proof was the diagonalization method of Cantor, which Mark Chu-Carroll is kind enough to explain to us, while he takes a timeout from beating errant creationists with sticks of logic. Mark notes that he's been going back to some old books on Set theory and is thoroughly enjoying them, another example of the theme of the day :)

Godel's incompletenes theorem is another of the tying-yourself-in-knots variety, and this year's Godel prize winner is a result in the same vein, showing why certain "natural proof structures" cannot be used to prove P != NP. Siva and Bill Gasarch have the scoop.

Walt at Ars Mathematica catches up to the Bulletin of the AMS, highlighting some nice expository articles that should make Estraven happy.

It's time to get educational now. Dave Marain helps high school math teachers everywhere with lesson plans for teaching quadratic systems and tangents without calculus. Mikael Johansson shows bravery far above and beyond the call of duty, explaining fundamental groupoids to a group of 9th graders. Heck, I don't even understand what a groupoid is, and I read John Baez all the time !

jd2718 shows his students how Fermat is connected to them. It would be remiss of me not to give a shout out to the Mathematics Genealogy Project. We've all heard the horror stories about the students who write "dy/dx = y/x"; Dan Greene at The Exponential Curve talks of students who write "log 5/log 2 = 5/2" and wonders if we need to change the notation for log to something more "mathematical".

I am delighted, and surprised, to see the quality of reports produced in the undergraduate

automata class that Leo Kontorovich is TAing. Read more about what his students did here. Andy Drucker weaves tales (tails?) of dogs eating steak in mineshafts, and cats climbing GMO-compromised trees, to explain some of the subtler details of computability theory.

We're almost ready to wrap up this edition of the carnival. Chew on some simple brainteasers that will excercise regions of your brain that you may not have known existed ! And review the history of calculating devices, with some speculation on what future calculators might look like.

Finally, no carnival of mathematics would be complete without a cartoon by XKCD:

And that's all, folks ! Keep those posts coming. The next Carnival of Mathematics is in two weeks, hosted by JD2718.

Tuesday, May 15, 2007

This year's Godel Prize

The 2007 Godel Prize goes to Alexander Razborov and Steven Rudich for their paper, "Natural Proofs" (JCSS Vol 55. No. 1, 1997).

I'd like to think that Godel himself would have approved of this particular award, given the mind-bending nature of the Razborov-Rudich result. To use Scott Aaronson's loose formulation, their result can be summarized as

The reason P != NP is so difficult to prove is that P != NP.

Computational Complexity (is it the Lance-blog, or the Bill-blog, or a Lance-post in the Bill-blog, or a Lance-post in the Bill-blog-that-used-to-be-Lance's-blog?), and In Theory have detailed explanations of the RR result, and do a far better job than I can do here.

To put it in perspective, the Razborov-Rudich result is a "meta-result": it says that no proofs of a certain kind (the so-called natural proofs) can be used to prove P != NP, unless other hardness assumptions break to begin with. Another example of a meta-result is the (older) result by Baker, Gill and Solovay that the P=NP question does not relativize (i.e., it cannot be resolved using methods that continue to hold under the application of oracles).

Razborov-Rudich really put a shaft in methods for separating P and NP (see this pessimistic post by Lance). If only all the P vs NP cranks actually tried examining proofs like this before wasting all those valuable bits on comp.theory ;)

(HT: [LB, UB] and Process Algebra Diary).

Friday, May 11, 2007

Reminder: Deadline for the 8th Carnival of Mathematics in a week

Probabilistically checkable presentations

My ex-advisor, long-time VC, and the M in ALMSS, Rajeev Motwani, will be one of the experts helping to select 20 startups to fund as part of the TechCrunch20 conference.

Maybe he'll sample a few slides from each presentation....

Wednesday, May 09, 2007

Tracking changes in LaTeX

A rather long time ago, I had asked how to track changes in Emacs/LaTeX, along the lines of what Word can do. There were many suggestions at the time, along the lines of using CVS, and one person had even mentioned 'texdiff'.

I finally got around to trying latexdiff today, and it works quite well. Given an old and a new LaTeX document, it outputs a "diff" TeX file that, when compiled, marks changes in color: blue for insertions, red for deletions. People I know use this all the time for managing changes with collaborators.

The program itself can be run as a standalone Perl script, so it's quite portable. I plan to use it more often.

Tuesday, May 08, 2007

Faure sequences and quasi-Monte Carlo methods

Fix a number n, and consider the following recursive procedure to construct a permutation $\sigma(n)$ of the numbers 0..n-1. We'll view the permutation as a sequence, so the permutation (0 2 1) maps 0 to 0, 1 to 2 and 2 to 1. When convenient, we'll also treat the sequences as vectors, so we can do arithmetic on them.

- If n is even, then $\sigma(n) = s_1 \circ s_2$, where $s_1 = 2\sigma(n/2), s_2 = 2\sigma(n/2)+1$.

For example, $\sigma(2) = (0 1)$. $\sigma(4) = (0 2) \circ (1 3) = (0 2 1 3)$

- If n is odd, then $\sigma(n) = s_1 \circ (n-1)/2 \circ s_2$, where $s_1$ and $s_2$ are constructed by taking the sequence for $\sigma(n-1)$, incrementing all elements that are at least $(n-1)/2$, and then splitting the sequence into two parts of equal length.

For example, $\sigma(4) = (0 2 1 3)$. Let n = 5. Then incrementing all elements at least 2 gives us $(0 3 1 4)$. Splitting and inserting (2) gives us $\sigma(5) = (0 3 2 1 4)$
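The two rules above translate fairly directly into code. Here is a minimal Python sketch, reading $\circ$ as concatenation of sequences. The function name `faure_permutation` is my own, and the base case $\sigma(1) = (0)$ is an assumption: the rules don't state it, but it is what makes $\sigma(2) = (0 1)$ come out right.

```python
def faure_permutation(n):
    """Return sigma(n) as a list of the numbers 0..n-1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    if n == 1:
        return [0]  # assumed base case, not stated explicitly in the rules
    if n % 2 == 0:
        # Even case: s1 = 2*sigma(n/2), s2 = 2*sigma(n/2) + 1, concatenated.
        half = faure_permutation(n // 2)
        return [2 * x for x in half] + [2 * x + 1 for x in half]
    # Odd case: bump every element >= (n-1)/2 in sigma(n-1), split the result
    # into two halves of equal length, and put (n-1)/2 in the middle.
    mid = (n - 1) // 2
    bumped = [x + 1 if x >= mid else x for x in faure_permutation(n - 1)]
    return bumped[:mid] + [mid] + bumped[mid:]


print(faure_permutation(4))  # [0, 2, 1, 3]
print(faure_permutation(5))  # [0, 3, 2, 1, 4]
```

This builds the whole sequence, which takes space linear in n; it is only meant to make the recursion concrete.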

For the backstory of where this sequence, known as a Faure sequence, comes from, read on...

One of the applications of geometric discrepancy is in what are called quasi-Monte Carlo methods. If you want to estimate the integral of some complicated function over a domain (for simulation purposes, for example), one way of doing this is to sample at random from the domain, and use the function evaluations at the sampled points to give an estimate of the integral. Typically, the function is expensive to evaluate as well, so you don't want to pick too many points.
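As a rough illustration of the sampling estimate, here is a small Python sketch for the simplest case, a one-dimensional integral over an interval [a, b]. The name `monte_carlo_integral` and its parameters are hypothetical, chosen just for this example.

```python
import random


def monte_carlo_integral(f, a, b, num_samples, rng=None):
    """Estimate the integral of f over [a, b] by averaging f at random points."""
    rng = rng or random.Random()
    total = sum(f(rng.uniform(a, b)) for _ in range(num_samples))
    # The average value of f times the length of the interval approximates the integral.
    return (b - a) * total / num_samples


# Example: the integral of x^2 over [0, 1] is 1/3.
estimate = monte_carlo_integral(lambda x: x * x, 0.0, 1.0, 20000, random.Random(0))
print(estimate)
```

The error of such an estimate shrinks roughly like one over the square root of the number of samples, which is why expensive functions make the choice of sample points matter.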

Of course in practice, you're picking random points using a random number generator that is itself based on some pseudorandom generation process. An important area of study, called quasi-Monte Carlo methods, asks if this middleman can be eliminated. In other words, is it possible to generate a deterministic set of points that suffices to approximate the integral ? This is of course the question of finding a low discrepancy set, something that we've used in computational geometry to derandomize geometric algorithms (especially those based on $\epsilon$-net constructions).

There are many ways of constructing good sequences, and a good overview can be found in Chazelle's discrepancy book (read it free here). One of the more well known is due to Halton, and works by what is called radical inversion: given a base p, write down the numbers from 1 to n in base p, and then reverse the digits, and map back to a number less than one. For example, using a base of 2, we can write 6 as 110, which reversed becomes 011, or after adjoining a binary point, 0.011 (in base 2) = 3/8. This specific sequence, using base 2, is called a van der Corput sequence and is also used to construct a Hammersley point set. For sampling in d-dimensional space, the trick is to let the jth coordinate of the ith point be the ith entry in a Halton sequence using some base $p_j$ (usually a prime).
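Radical inversion is easy to write down. The Python sketch below uses exact fractions so the 6 -> 3/8 example can be checked directly; the names `radical_inverse` and `halton_point` are my own, not from any library.

```python
from fractions import Fraction


def radical_inverse(i, base=2):
    """Reverse the base-`base` digits of i around the radix point."""
    result = Fraction(0)
    place = Fraction(1, base)  # value of the first digit after the radix point
    while i > 0:
        i, digit = divmod(i, base)  # peel off the least significant digit
        result += digit * place
        place /= base
    return result


def halton_point(i, bases):
    """The ith point: coordinate j is the radical inverse of i in base bases[j]."""
    return tuple(radical_inverse(i, p) for p in bases)


print(radical_inverse(6, 2))    # 3/8
print(halton_point(1, (2, 3)))  # (Fraction(1, 2), Fraction(1, 3))
```

With base 2 alone, `radical_inverse` produces the van der Corput sequence. Note that each coordinate is computed directly from i, without storing the earlier entries.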

It can be shown that these sequences have low discrepancy; however, they can have "aliasing" problems, or repeated patterns, if the primes are not large enough. One way around this problem is by observing that if we ignore the radix point, all we're really doing is constructing a permutation of the numbers from 0 to $p^d-1$ (for a d-digit number in base p). Thus, if we could scramble the permutation further, this might allow us to preserve the discrepancy properties without the annoying aliasing effects. The process described above is a well known scramble called the Faure sequence. It maintains the desired properties of the sequence, and is quite popular in the quasi-MC community.

Note that if we were to preprocess all needed sequences, we'd need n*d space, where n is the number of samples, and d is the dimension of the space. This is not desirable for large dimensional sampling problems, and hence the question about the direct evaluation of coordinates.

Friday, May 04, 2007

Silvio Micali elected to the NAS

The National Academy of Sciences just announced 72 new members, among them Silvio Micali from MIT. For those who may not know, he's famous for his work in pseudorandomness, cryptography, and interactive proof systems. Among his many awards are the Godel Prize in 1993 and being named a Fellow of the AAAS in 2003.

The 7th Carnival of Mathematics is out

Read all about it.

I'll be hosting the next one, so if you have a submission you'd like to be publicized, send it to sureshv REMOVE at THIS gmail AND dot THIS com. Or, you can submit it at the master blog carnival site.

Thursday, May 03, 2007

Hazardous Jobs #523: Dean of Undergraduate Studies

From Bob Sloan's rumination on the joys of being an NSF program manager:

When I left for NSF, I was Director of Undergraduate Studies for a department that had well over 600 majors at the height of the dot com boom. The previous two holders of that position had only ended their time in it by leaving the country in one case and dying in the other—both far more extreme than going to NSF for a couple of years

The joy of AMS Notices.

For the past few months, I've been reading articles from the Notices of the AMS, something I never did earlier. This is a direct consequence of reading In Theory and Ars Mathematica, both of which will regularly highlight articles from the latest issue of the Notices.

I'm amazed at how many of the articles I find interesting enough to peruse, or even print out to read offline. Most of these are technical articles, on specific topics in mathematics. Quite often, there will be discussions on topics tangentially related to theoryCS or even things that I'm interested in. For example, the May issue of the Notices has an article titled, "How to generate random matrices from the classical compact groups". Anyone who messes around in geometry and graphics will immediately see the connection to the standard problem of generating a random rotation, and in fact there's a very interesting explanation of the group theory underlying the standard procedure for generating a random orthogonal matrix.

A second article, with the whimsical title "If Euclid had been Japanese", discusses the constructibility of points using folding operations rather than "Euclidean" constructions. Apart from the obvious origami connections, this is particularly interesting to me because I've been experimenting with a short presentation that tries to explain algorithms via origami (folds are like steps in a program, etc.).

I could go on like this. Every issue has at least one article that is both accessible and interesting to me, and I'm not even a mathematician ! Why can't we have such a delightful magazine for computer science, or even for theoryCS ?

SIGACT News does a valiant job. And it's hard work to manage a newsletter, along with all one's other activities. But it comes out far too rarely, and one doesn't often find the kind of short vignettes that entertain and illuminate at the same time. I enjoy the algorithms column and the geometry column. I will make valiant attempts to read the complexity column, but it's often too "structural complexity" for me. But in a way I am unable to articulate, I find SIGACT News somehow less stimulating than the Notices. I wonder if others share this view as well ?

Update (5/3/07): I think I just had an epiphany. The above post is the equivalent of my standing in front of the Model T, wondering why my horse-buggy can't go any faster. In other words, we already have excellent publications that write expository surveys and cute vignettes that connect the community together. They're called blogs !!

Wednesday, May 02, 2007

MAD about ALGOs

The new BRICS Center for Massive Data Algorithmics (MADALGO) is kicking off with a summer school on data streams. It's a 4-day affair, running Aug 20-23 in Aarhus, Denmark, and I am told on good authority that along with learning the ins and outs of stream algorithms, you will also take a mandatory course on how to open one beer bottle with another one.

[Side note: you might ask a Danish person, "What do I do if I have only one beer bottle?". Danish Person: < long silence >]

The set of topics is just what you'd expect for a data stream course:

- Algorithms for metric and geometric data streams

- Randomized sketching and compressed sensing

- Histograms, norms and other statistics of data streams

- Algorithms for ordered data

- Lower bounds and communication complexity

And when you're done streaming, you can go to Legoland, or experience the rather nonstandard (and NSFW) techniques the Danes employ to slow traffic down.
