I'm at JPL today and tomorrow, visiting a colleague (and hopefully collaborator) at NASA, and attending the first day of the AIRS Science Meeting.

AIRS stands for the Atmospheric Infrared Sensor, an instrument that sits aboard NASA's Aqua satellite. Along with other sensors onboard Aqua, AIRS provides for detailed atmospheric sensing, especially of greenhouse gases that account for climate change.

In fact, the AIRS science meeting is ground central for some of the climate change work that we mostly hear about through a political lens. It's fascinating to sit in the JPL cafeteria and listen to atmosphere scientists talk about some of the fundamental problems in climate science (clouds are apparently a BIG mystery in terms of how they influence global temperature).

So what's a computer scientist doing in this super-charged atmosphere ? The instrument produces gargantuan amounts of data, and there are all kinds of interesting data analysis questions that need to be addressed at scale. What makes these questions interesting is that unlike in much of mining, there is a physical ground truth that we can use to validate any interesting patterns that a mining process might uncover.

Ruminations on computational geometry, algorithms, theoretical computer science and life

Tuesday, March 27, 2007

Visiting JPL

Sunday, March 25, 2007

An ending...

They say, "all good things come to an end". What they don't say is, "if good things didn't end, you wouldn't realize how good they were".

Lance announces today that he's shutting down the Computational Complexity blog. As far as I know, he's the ur-TCS blogger, and was the direct inspiration for my starting the Geomblog. I've always had the Complexity blog on my must-read list, and have felt both pressured (to write better posts) and inspired (by the rich content) every time I've seen a new posting.

Another aspect of Lance's blog that I envy is the rich commenter community: he was able to create a forum for discussion of TheoryCS, on matters both technical and non-technical, that will be hard to replace.

So thanks for all the posts, Lance, and hopefully you'll be back one day !

Lance announces today that he's shutting down the Computational Complexity blog. As far as I know, he's the ur-TCS blogger, and was the direct inspiration for my starting the Geomblog. I've always had the Complexity blog on my must-read list, and have felt both pressured (to write better posts) and inspired (by the rich content) every time I've seen a new posting.

Another aspect of Lance's blog that I envy is the rich commenter community: he was able to create a forum for discussion of TheoryCS, on matters both technical and non-technical, that will be hard to replace.

So thanks for all the posts, Lance, and hopefully you'll be back one day !

4th Carnival of mathematics up

Wednesday, March 21, 2007

Job announcements

For people who don't subscribe to compgeom-announce:

- [Apr 30 deadline] One-year postdoc in the area of computational geometry and topology, @ GIPSA laboratory, CNRS - UMR

- [Apr 30 deadline] Ph.D position in INRIA in computational geometry, specifically on triangulations for non-standard geometric spaces.

Meta-posts

I was browsing my referrer logs and found a callback to Lance's blog. In the comments for the post, I see this comment (emphasis mine):

;)

Here's one that I've found very vexing: Let A(d) denote the least surface area of a cell that tiles R^d via the translations in Z^d. Improve on the following bounds:It's a meta post !!! And by the way, there's no such thing as "JUST a problem in geometry". Harumph !!

sqrt(pi e / 2) sqrt(d) <= A(d)/2 <= d - exp(-Omega(d log d)).

(It's a little more natural to consider A(d)/2 than A(d).)

The lower bound is by considering a volume-1 sphere, the upper bound by monkeying around a little with a cube's corner.

Any proof of A(d)/2 >= 10sqrt(d) or A(d)/2 <= d/10 would be very interesting.

For motivation: Either pretend I posted on Suresh's blog (so it's just a problem in geometry); or -- it's related to finding the best rate for parallel repetition of a simple family of 2P1R games.

;)

Tuesday, March 20, 2007

On ranking journals

Via Cosma Shalizi comes a new way of ranking journals developed by Jevin West, Ben Althouse, Martin Rosvall, Ted Bergstrom, and Carl Bergstrom. It works on the PageRank principle: take a random paper from a journal and do a random walk on the citations, using the stationary distribution to rank journals. The result is eigenfactor.org, a website that catalogues many journals and other publications, and provides both a ranking by their "pagerank" but also a "value for money" that might be useful when deciding which journals to purchase for a library.

Impact measurement for journals has become a popular parlor game, as well as impact factors like the 'h-index' for individual researchers. There are all kinds of problems with these measurements in general, and Eigenfactor does provide a way of eliminating some of the usual problems with measuring impact across multiple communities with different citation mechanisms, community sizes, and so on.

Eigenfactor has a few top 10 lists for different areas (science, social science, etc): here's my informal list of top ranked computer science algorithms (and friends) journals, ranked by article impact factor (all scores are percentiles over the universe of 6000+ journals considered):

* The ACM Transactions on Algorithms, which absorbed most of the editorial board of J. Alg, is too new to show up. This ranking should probably reflect the historical relevance of J. Alg as well as its current state.

Impact measurement for journals has become a popular parlor game, as well as impact factors like the 'h-index' for individual researchers. There are all kinds of problems with these measurements in general, and Eigenfactor does provide a way of eliminating some of the usual problems with measuring impact across multiple communities with different citation mechanisms, community sizes, and so on.

Eigenfactor has a few top 10 lists for different areas (science, social science, etc): here's my informal list of top ranked computer science algorithms (and friends) journals, ranked by article impact factor (all scores are percentiles over the universe of 6000+ journals considered):

- SIAM Review: 98.35

- J. ACM: 97.91

- IEEE Trans. Inf. Theory: 95.05

- Machine Learning: 93.92

- SICOMP: 93.04

- JCSS: 90.93

- Journal of Algorithms: 90.31*

- DCG: 85.29

- CGTA: 81.96

- Algorithmica: 79.13

* The ACM Transactions on Algorithms, which absorbed most of the editorial board of J. Alg, is too new to show up. This ranking should probably reflect the historical relevance of J. Alg as well as its current state.

Monday, March 19, 2007

John Backus, 1924-2007

John Backus died on Saturday. He was the father of FORTRAN, Turing Award winner, and one of the names behind the Backus-Naur form (Peter Naur, 2005 Turing Award winner, was the other).

No matter how much you might make fun of FORTRAN today, the fact is that it remains the language of choice for many scientific computing packages. Even if you're writing in C, you're probably interfacing with routines first written in FORTRAN.

His Turing Award lecture is also a remarkable read. Titled, "Can programming be liberated from the Von Neumann style", it laid the groundwork for functional programming, and anticipated many of the stream programming paradigms that we see today, from GPU programs to Google's MapReduce system. I'm always humbled when I read articles from so many years ago that have such resonance today; I can only hope that anything that I do can still have value even ten years later.

No matter how much you might make fun of FORTRAN today, the fact is that it remains the language of choice for many scientific computing packages. Even if you're writing in C, you're probably interfacing with routines first written in FORTRAN.

His Turing Award lecture is also a remarkable read. Titled, "Can programming be liberated from the Von Neumann style", it laid the groundwork for functional programming, and anticipated many of the stream programming paradigms that we see today, from GPU programs to Google's MapReduce system. I'm always humbled when I read articles from so many years ago that have such resonance today; I can only hope that anything that I do can still have value even ten years later.

Saturday, March 17, 2007

Anonymizing PDF

File this under 'late-night LaTeX griping':

Is there any way of stripping metadata from a PDF file ? I'm writing a referee report for a journal, and used PDFLaTeX to create the report. When I scan it in acroread, there's all kinds of meta data that could identify me.

Now pdftk is a useful package that can strip out some of the simple metadata like 'creator'. However, pdftex adds "Advanced" fields, and one of them is the full pathname of the original LaTeX file. If your filesystem (UNIX) is anything like mine, then a part of that pathname is the /<username>/ section, which in many instances is an almost unique identifier. This also happens with dvipdfm, which uses the gs backend to create the PDF file, and with ps2pdf. pdftk cannot strip out these fields, because it doesn't appear to see them.

I suspect that if I owned a copy of the very-not-free Acrobat, I could meddle around with this metadata. Obviously I could submit the review as a Postscript file, but in general I prefer to maintain PDF. Further this problem also occurs if I want to do due diligence when submitting to conferences with double blind review, and sometimes I don't have the option to use PS.

Is there any way of stripping metadata from a PDF file ? I'm writing a referee report for a journal, and used PDFLaTeX to create the report. When I scan it in acroread, there's all kinds of meta data that could identify me.

Now pdftk is a useful package that can strip out some of the simple metadata like 'creator'. However, pdftex adds "Advanced" fields, and one of them is the full pathname of the original LaTeX file. If your filesystem (UNIX) is anything like mine, then a part of that pathname is the /<username>/ section, which in many instances is an almost unique identifier. This also happens with dvipdfm, which uses the gs backend to create the PDF file, and with ps2pdf. pdftk cannot strip out these fields, because it doesn't appear to see them.

I suspect that if I owned a copy of the very-not-free Acrobat, I could meddle around with this metadata. Obviously I could submit the review as a Postscript file, but in general I prefer to maintain PDF. Further this problem also occurs if I want to do due diligence when submitting to conferences with double blind review, and sometimes I don't have the option to use PS.

Thursday, March 15, 2007

Dr. Karp and The TCS Lens

or How I learned to stop worrying and love complexity.

[ed. note: This note was prompted by Aaron Clauset's series of articles titled 'Unreasonable effectiveness' (the title itself a riff on Eugene Wigner's famous essay). Further inspiration came from reading the various summaries of Karp's 'algorithmic lens' idea.]

I believe in the gospel of St. Bernard. We are about to enter the age of the algorithm. The only question is: what kind of algorithm will it be ?

We're all familiar with the steps. Define the problem. Design an algorithm. Analyze the algorithm. Rinse and repeat, till we have a matching lower bound, or till we hit an even harder problem. Algorithms are designed from the top down, by a coffee-swilling researcher with a whiteboard, with the elegance of a swiss watch, or the clumsiness of a sledgehammer. Some of them are so beautiful they make you weep. Some are algorithms "in name only"; no one in their right minds would ever dream of implementing one of these beasts.

The algorithmic lens flips this on its head: complexity (and the solution) emerges from a set of simple rules. It's like Spore; set up the rules, start the game, and see what happens. The ingenuity here is not in the construction of a solution, but in the design of the rules. It's declarative, not procedural. It's bottom up, not top down. It's distributed, not centralized.

It has the flavor of (computational) complexity: I'll give you a resource; a guess, a random coin, a scratch pad, an AskJeeves clone. You tell me what I can do with how little. But it's still a lens. Why ? Things we're familiar with show up as distributed, bottom up, emergent constructions: A Voronoi diagram is a battle between equally (or unequally) matched forces. A Steiner tree is a soap bubble diagram. A clustering algorithm is a local "I'll go to my closest friend"; a regular language is the consequence of amnesiac entities bouncing around between states.

It's unsettling: we aren't the masters of our domain any more. We're not GOD, intelligently designing beautiful watches. We're just the rule-makers for a complex system. It's like being the parent of a young child !

What we can do is this: find out which rules yield cause what solutions to emerge. We study social networks, and watch complexity emerge from a set of simple pairwise interactions. In other words, the algorithm IS the social network. You can't get much more Web 2.0 than that...

If you've heard this before, it's because you have: things like the Ising model, phase transitions, statistical mechanics, Conway's Game of Life, Stephen Wolfram's New Kind of Science. So what makes these concepts so exciting now ?

Maybe it's all about scale. We preach that immense scale requires theoretical analysis; the N0 in the "for all n > N0" of O() notation is finally here. But maybe linear time algorithms, streaming algorithms, and small space sketching are all soon-to-be-futile attempts to stave off the inevitable horrible failure of programs to manage the complexity of the data we deal with. Suddenly, local algorithms that evolve continuing solutions look quite appealing, as well adaptive.

It's an exciting time to do algorithms, whether you're designing watches, or setting up an ecosystem.

[ed. note: This note was prompted by Aaron Clauset's series of articles titled 'Unreasonable effectiveness' (the title itself a riff on Eugene Wigner's famous essay). Further inspiration came from reading the various summaries of Karp's 'algorithmic lens' idea.]

I believe in the gospel of St. Bernard. We are about to enter the age of the algorithm. The only question is: what kind of algorithm will it be ?

We're all familiar with the steps. Define the problem. Design an algorithm. Analyze the algorithm. Rinse and repeat, till we have a matching lower bound, or till we hit an even harder problem. Algorithms are designed from the top down, by a coffee-swilling researcher with a whiteboard, with the elegance of a swiss watch, or the clumsiness of a sledgehammer. Some of them are so beautiful they make you weep. Some are algorithms "in name only"; no one in their right minds would ever dream of implementing one of these beasts.

The algorithmic lens flips this on its head: complexity (and the solution) emerges from a set of simple rules. It's like Spore; set up the rules, start the game, and see what happens. The ingenuity here is not in the construction of a solution, but in the design of the rules. It's declarative, not procedural. It's bottom up, not top down. It's distributed, not centralized.

It has the flavor of (computational) complexity: I'll give you a resource; a guess, a random coin, a scratch pad, an AskJeeves clone. You tell me what I can do with how little. But it's still a lens. Why ? Things we're familiar with show up as distributed, bottom up, emergent constructions: A Voronoi diagram is a battle between equally (or unequally) matched forces. A Steiner tree is a soap bubble diagram. A clustering algorithm is a local "I'll go to my closest friend"; a regular language is the consequence of amnesiac entities bouncing around between states.

It's unsettling: we aren't the masters of our domain any more. We're not GOD, intelligently designing beautiful watches. We're just the rule-makers for a complex system. It's like being the parent of a young child !

What we can do is this: find out which rules yield cause what solutions to emerge. We study social networks, and watch complexity emerge from a set of simple pairwise interactions. In other words, the algorithm IS the social network. You can't get much more Web 2.0 than that...

If you've heard this before, it's because you have: things like the Ising model, phase transitions, statistical mechanics, Conway's Game of Life, Stephen Wolfram's New Kind of Science. So what makes these concepts so exciting now ?

Maybe it's all about scale. We preach that immense scale requires theoretical analysis; the N0 in the "for all n > N0" of O() notation is finally here. But maybe linear time algorithms, streaming algorithms, and small space sketching are all soon-to-be-futile attempts to stave off the inevitable horrible failure of programs to manage the complexity of the data we deal with. Suddenly, local algorithms that evolve continuing solutions look quite appealing, as well adaptive.

It's an exciting time to do algorithms, whether you're designing watches, or setting up an ecosystem.

Wednesday, March 14, 2007

A movie about a font is my kind of movie

One of the curses of making web pages and writing documents is an unhealthy obsession with fonts. People who've written papers with me have heard my paens to the Euler math font with barely suppressed irritation, and I often have to stop myself from attempting to call out names of familiar fonts when walking down the street :).

Sounds like this is the movie for me:

Sounds like this is the movie for me:

Helvetica, which had its world premiere at the conference, presents the life story of something all of us encounter on a daily (or even hourly) basis. Created in 1957 by the Swiss modernist designer Max Miedinger as a response to the cluttered typography and design of the postwar era, Helvetica's clean neutrality and balanced use of the empty space surrounding letters quickly made it a go-to font for public signage, advertising, corporate logos and works of modernist design around the world

[...]

Filmmaker Gary Hustwitt revels in his fascination with something so commonplace that it blends almost entirely into a context-less background, becoming a detective of sorts to unveil the myriad everyday places Helvetica is hiding (“It's a disease,” Hustwitt said of his obsessive font-spotting).

Tuesday, March 13, 2007

And he's OOOUUUTTT !!!!

Yep, the world cup is now finally on. After a long time, I'm in the same time zone, which is not as nice as it might seem, given that all matches are during the workday. I was trying to explain this to my wife, and she said, 'Aren't cricket matches always at 3 am ?'. Considering that the last time she saw cricket was when I dragged her out to see the India-Aus final 4 years ago, she has a point :).

As people who watch cricket in the US know, Kelly Broadcasting has had a strangehold over cricket rights in North America for some time now. This year however, there are many more options for watching cricket. You can continue to watch the WC on dishTV, but now DirecTV has moved into the biz with a $200 package for the entire cup. If that's too pricey for your taste (and you can't spend all day at home watching on TV!), then sgstream.com has a streaming package for $75, which to my amazement works quite well (so far; let's see what happens for the first India game).

Right now, WI is 140-3 after 36 overs against Pakistan.

As people who watch cricket in the US know, Kelly Broadcasting has had a strangehold over cricket rights in North America for some time now. This year however, there are many more options for watching cricket. You can continue to watch the WC on dishTV, but now DirecTV has moved into the biz with a $200 package for the entire cup. If that's too pricey for your taste (and you can't spend all day at home watching on TV!), then sgstream.com has a streaming package for $75, which to my amazement works quite well (so far; let's see what happens for the first India game).

Right now, WI is 140-3 after 36 overs against Pakistan.

Monday, March 12, 2007

Order polytopes

One view of the techniques of computational (and combinatorial) geometry is the delicate tension between the discrete (combinatorial) and the continuous (geometric). Whether it be Davenport-Schinzel sequences and lower envelopes, or VC-dimension and intersections of geometric sets, a common step in making geometric structures tractable is finding a combinatorial structure that captures the aspect of the geometry that's important for a particular problem.

It's nice to return the favor sometimes. Combinatorics and topology have strong connections, and one doesn't have to go too far from home find instances of "lifting" a combinatorial problem to a topological domain to solve it; Lovasz's proof of the Kneser conjecture, and the various methods for proving evasiveness properties, are among the more well known examples. In fact, distributed computing is replete with topological hammers for proving properties of protocols.

Of course, one can view the entirety of linear programming as an example of lifting combinatorics to geometry; I'm deliberately ignoring it because you have to go through the integer lattice to get combinatorial problems, rather than having geometry characterizing the structure directly. This is of course totally ad hoc :)

What I describe next is an extremely elegant geometric view of a purely combinatorial problem. Suppose you're sorting numbers, and you have some rough idea of the sorted order. Maybe you can rank some of the elements, but not all of them (for example, maybe you're integrating results from different web searches). At any rate, what you have is a partial order, and you'd like to get a sense of its 'orderedness'. Specifically, you'd like to estimate the number of different ways this partial order can be completed (by inserting orderings between as-yet unordered elements without disturbing existing order). Such a completion (or extension) is called a linear extension, because you can think of the final total order as being points on a line, numbered from lowest to highest rank.

For example, suppose you have the four elements (a,b,c,d) and you know that (a < b) and (c < d). There are then six ways of constructing a linear extension from this partial order.

Suppose now that comparisons were costly, and we'd like to choose the next comparison so as to reduce the number of potential linear extensions by as much as possible. If we could guarantee that the number reduced by an α fraction, then we could complete the sorting using logα E comparisons, where E was the number of linear extensions to begin with. Since each comparison partitions the set of linear extensions into two sets, clearly this fraction must be at least 1-α.

It's not hard to see that we can't do better than α = 2/3, by considering the partial order {(a < b), c}. There's always a way of picking the answer to the next comparison to make this so. The best known bound to date is roughly α = 0.7236, and this bound (and earlier methods) make use of an elegant geometric characterization of linear extensions via order polytopes.

Given n elements in the partial order, let the order polytope be the set of points in [0,1]n such that the coordinates of each point satisfies the partial order. Namely, if there's a constraint of the form a1 < a2, then the coordinates x1 and x2 of the point x satisfy the same constraint.

It's not hard to see that this is indeed a polytope; it's the intersection of a set of hyperplanes, and is bounded. What is more intriguing is that every simplex of this polytope corresponds to a specific linear extension. If we look for example at the simplex defined by the four blue points, the coordinates are (0,0,0), (0,1,1), (0,1,0), and (1,1,1), which describes the order (a < c < b). All these simplices are congruent and non overlapping, and each has volume 1/n!. This implies the remarkable result that the number of linear extensions for a given partial order is the volume of the resulting order polytope, divided by n!.

If we look for example at the simplex defined by the four blue points, the coordinates are (0,0,0), (0,1,1), (0,1,0), and (1,1,1), which describes the order (a < c < b). All these simplices are congruent and non overlapping, and each has volume 1/n!. This implies the remarkable result that the number of linear extensions for a given partial order is the volume of the resulting order polytope, divided by n!.

The favor gets returned as well. The paper, "Counting linear extensions is #P-complete", by Brightwell and Winkler in 1991, yields the corollary that computing the volume of a polyhedron is #P-complete.

For more on this, Matousek's Lectures on Discrete Geometry is a wonderfully readable source.

It's nice to return the favor sometimes. Combinatorics and topology have strong connections, and one doesn't have to go too far from home find instances of "lifting" a combinatorial problem to a topological domain to solve it; Lovasz's proof of the Kneser conjecture, and the various methods for proving evasiveness properties, are among the more well known examples. In fact, distributed computing is replete with topological hammers for proving properties of protocols.

Of course, one can view the entirety of linear programming as an example of lifting combinatorics to geometry; I'm deliberately ignoring it because you have to go through the integer lattice to get combinatorial problems, rather than having geometry characterizing the structure directly. This is of course totally ad hoc :)

What I describe next is an extremely elegant geometric view of a purely combinatorial problem. Suppose you're sorting numbers, and you have some rough idea of the sorted order. Maybe you can rank some of the elements, but not all of them (for example, maybe you're integrating results from different web searches). At any rate, what you have is a partial order, and you'd like to get a sense of its 'orderedness'. Specifically, you'd like to estimate the number of different ways this partial order can be completed (by inserting orderings between as-yet unordered elements without disturbing existing order). Such a completion (or extension) is called a linear extension, because you can think of the final total order as being points on a line, numbered from lowest to highest rank.

For example, suppose you have the four elements (a,b,c,d) and you know that (a < b) and (c < d). There are then six ways of constructing a linear extension from this partial order.

Suppose now that comparisons were costly, and we'd like to choose the next comparison so as to reduce the number of potential linear extensions by as much as possible. If we could guarantee that the number reduced by an α fraction, then we could complete the sorting using logα E comparisons, where E was the number of linear extensions to begin with. Since each comparison partitions the set of linear extensions into two sets, clearly this fraction must be at least 1-α.

It's not hard to see that we can't do better than α = 2/3, by considering the partial order {(a < b), c}

Given n elements in the partial order, let the order polytope be the set of points in [0,1]n such that the coordinates of each point satisfies the partial order. Namely, if there's a constraint of the form a1 < a2, then the coordinates x1 and x2 of the point x satisfy the same constraint.

It's not hard to see that this is indeed a polytope; it's the intersection of a set of hyperplanes, and is bounded. What is more intriguing is that every simplex of this polytope corresponds to a specific linear extension.

If we look for example at the simplex defined by the four blue points, the coordinates are (0,0,0), (0,1,1), (0,1,0), and (1,1,1), which describes the order (a < c < b). All these simplices are congruent and non overlapping, and each has volume 1/n!. This implies the remarkable result that the number of linear extensions for a given partial order is the volume of the resulting order polytope, divided by n!.

If we look for example at the simplex defined by the four blue points, the coordinates are (0,0,0), (0,1,1), (0,1,0), and (1,1,1), which describes the order (a < c < b). All these simplices are congruent and non overlapping, and each has volume 1/n!. This implies the remarkable result that the number of linear extensions for a given partial order is the volume of the resulting order polytope, divided by n!. The favor gets returned as well. The paper, "Counting linear extensions is #P-complete", by Brightwell and Winkler in 1991, yields the corollary that computing the volume of a polyhedron is #P-complete.

For more on this, Matousek's Lectures on Discrete Geometry is a wonderfully readable source.

Wednesday, March 07, 2007

Computer Scientist:Programming::Mathematician:Arithmetic

One of the things that continues to exasperate me on a regular basis is the conflation of computer science with programming. Consider two recent gems (emphasis mine):

As Lance mentions, the closure of stores like CompUSA is a harbinger of the end of computer science as "television science". The more familiar people get with computers, the more they treat them as appliances rather than as complex devices worthy of worship.

What does this mean ? You aren't going to attract people to a field by saying, "Lookee here! here's a neat television ! Let me show you how to build one. It's FUN!!!!". First of all, people ain't stupid. Secondly, there's a lot more to computer science than programming.

Thankfully, we do see here and there the signs of a manifesto for computer science that doesn't involve actually programming a computer: From Jeanette Wing's CACM article:

p.s Chazelle is quickly becoming the poet-laureate for 21st century computer science: check out the table of contents for his course titled, "What do your DNA and your iPod have in common ?"

- From a press release for Microsoft TechFest 2007:

Boku, a virtual robot in a simulated world, debuted as a research project to teach kids basic programming skills in a fun and entertaining way. “There is an ongoing and deepening crisis in computer science,” Rashid said. “Our goal is to stem the tide by showing young kids the magic of software programming.”

- From an article on changing perceptions of computer science at college and K-12 level:

East Allen County Schools is working to make sure students are exposed to computer careers, whether they think they might be interested or not. All students are required to take a computer course before graduating, and those who know they are interested can take in-depth courses, including training on Cisco computer networks...

As Lance mentions, the closure of stores like CompUSA is a harbinger of the end of computer science as "television science". The more familiar people get with computers, the more they treat them as appliances rather than as complex devices worthy of worship.

What does this mean ? You aren't going to attract people to a field by saying, "Lookee here! here's a neat television ! Let me show you how to build one. It's FUN!!!!". First of all, people ain't stupid. Secondly, there's a lot more to computer science than programming.

Thankfully, we do see here and there the signs of a manifesto for computer science that doesn't involve actually programming a computer: From Jeanette Wing's CACM article:

Computer science is the study of computation: what can be computed and how to compute it.Amen to that. And notice how different it sounds to the version you might get from the random person on the street:

Computer science is the study of computers.If I had to preach the gospel of computer-science-as-computation, I'd probably riff off three things:

- The above mentioned CACM article. Message: Computer science is the study of algorithms and abstractions.

- Bernard Chazelle's IPod essay. Message: Algorithms are the language of modern science

- Scott Aaronson's 'Logicians on Safari' article. Message: Computer science is quantitative epistemology.

p.s Chazelle is quickly becoming the poet-laureate for 21st century computer science: check out the table of contents for his course titled, "What do your DNA and your iPod have in common ?"

Simulacrums all the way down...

You can already meet politicians, autograph books, have all kinds of kinky virtual sex, and complain about virtual denizens who spend their time in their virtual houses playing virtual video games. And now you can even get pregnant, virtually speaking (this from the creater of Fable, an Xbox RPG):

“There is the appreciation the wide world feels toward your character as he lives and fights in their world. There is the ability to make love and make babies. Yes, you can be both a man or a woman and if you're a woman, you can get pregnant. A first, he believes, for a main character in an RPG.”

(HT: Penny-arcade)

Friday, March 02, 2007

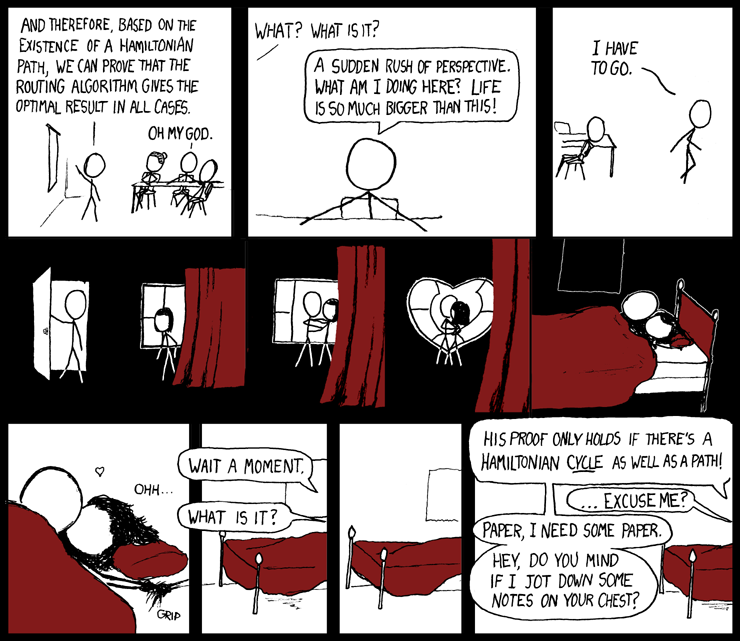

So so true....

Subscribe to:

Posts (Atom)