Ruminations on computational geometry, algorithms, theoretical computer science and life

Tuesday, July 31, 2007

New TheoryCS Blog Alert !

Mihai Pătraşcu, cause of numerous flame wars on the complexity blog, as well as lonely campaigner for the rights of esoteric data structures everywhere, has a blog, self-billed as 'Terry Tao without the Fields Medal'.

Enjoy !

Thursday, July 26, 2007

A counting gem

Consider the following puzzle:

Given 2n items, determine whether a majority element (one occurring at least n+1 times) exists. You are allowed one pass over the data (which means you can read the elements in sequence, and that's it), and exactly TWO units of working storage.

The solution is incredibly elegant, and dates back to the early 80s. I'll post the answer in the comments tomorrow, if it hasn't been posted already.

Update: A technical point: the problem is a promise problem, in that you are promised that such an element exists. Or, in the non-promise interpretation, you are not required to return anything reliable if the input does not contain a majority element.
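Spoiler, for the impatient: here is a minimal sketch, assuming the intended answer is the classic majority-vote scan (usually credited to Boyer and Moore). The two units of storage are a candidate and a counter; the function name and the Python rendering are illustrative choices, not part of the original puzzle.

```python
def majority_candidate(items):
    """One pass, two units of storage: a candidate and a counter.

    If some element occurs more than half the time, it is returned.
    Otherwise the return value is arbitrary (the promise is violated).
    """
    candidate = None  # storage unit 1
    count = 0         # storage unit 2
    for x in items:
        if count == 0:
            # No surviving candidate: adopt the current element.
            candidate, count = x, 1
        elif x == candidate:
            count += 1
        else:
            # Pair off one copy of the candidate against a different element.
            count -= 1
    return candidate


if __name__ == "__main__":
    # 2n = 6 items, and 1 occurs n+1 = 4 times.
    print(majority_candidate(iter([1, 2, 1, 1, 3, 1])))
```

The idea is that every decrement cancels one copy of the candidate against one different element, and a true majority element cannot be cancelled out entirely. This matches the promise-problem caveat: with no majority element in the input, the returned value means nothing.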

Tuesday, July 24, 2007

STOC/FOCS/SODA: The Cage Match (with data!)

(Ed: This post, and the attendant web page and data, was initiated as a joint effort of Piotr Indyk and myself. Many others have helped improve the data, presentation and conclusions.)

Inspired by Michael Mitzenmacher's flamebait post on SODA/STOC/FOCS, we decided to roll up our sleeves, and resolve the biggest outstanding issue in Theoretical Computer Science, namely the great "STOC/FOCS vs. SODA" debate ("P vs. NP" is a tainted second, sullied by all that money being offered to solve it). We have some interesting preliminary observations, and there are many interesting problems left open by our work ;)

The hypothesis:

There is a significant difference in citation patterns between STOC/FOCS and SODA.

The plan:

First, we obtained the list of titles of conference papers appearing in STOC, FOCS and SODA in the last 10 years (1997-2006). We deliberately excluded 2007 because FOCS hasn't happened yet. We got this list from DBLP (Note: DBLP does not make any distinction between actual papers and tutorials/invited articles; we decided to keep all titles because there weren't that many tutorials/invited papers in any case).

For each title, we extracted the citation count from Google Scholar, using a process that we henceforth refer to as "The Extractor". Life is too short to describe what "The Extractor" is. Suffice it to say that its output, although not perfect, has been verified to be somewhat close to the true distribution (see below).

The results, and methodology, can be found at this link. The tables and graphs are quite self-explanatory. All source data used to generate the statistics are also available; you are welcome to download the data and make your own inferences. We'll be happy to post new results here and at the webpage.

OBSERVATIONS:

The main conclusion is that the hypothesis is valid: a systematic discrepancy between citation counts of SODA vs. STOC/FOCS does appear to exist. However, the discrepancy varies significantly over time, with years 1999-2001 experiencing the highest variations. It is interesting to note that 1999 was the year when SODA introduced four parallel sessions as well as the short paper option.

Although most of the stats for STOC and FOCS are quite similar, there appears to be a discrepancy at the end of the tail. Specifically, the 5 highest citation counts per year for STOC (years 1997-2001) are all higher than the highest citation count for FOCS (year 2001). (Note: the highest cited STOC article in 2001 was Christos Papadimitriou's tutorial paper on algorithms and game theory). The variation between SODA and STOC/FOCS in the 1999-2001 range shows up here too, between STOC and FOCS themselves. So maybe it's just something weird going on in these years. Who knows :)

Another interesting observation comes from separating the SODA cites into long and short paper groups (for the period 1999-2005). Plotting citations for short vs long papers separately indicates that the presence of short papers caused a net downward influence on SODA citation counts, but as fewer and fewer shorts were accepted, this influence decreased.

There are other observations we might make, especially in regard to what happens outside the peak citations, but for that we need more reliable data. Which brings us to the next point.

VALIDATION OF THE DATA:

To make sure that the output makes sense, we performed a few "checks and balances". In particular:

- we sampled 10 random titles from each of FOCS, STOC and SODA for each of the 10 years, and for each title we checked the citation count by hand. Results: there were 7 mistakes in FOCS, 9 in STOC, and 11 in SODA, indicating a current error rate in the 9-10% range.

- for each of FOCS, STOC, SODA, we verified (by hand) the values of the top 10 citation numbers, as reported by The Extractor

- we compared our stats for the year 2000 with the stats obtained by Michael. The results are pretty close:

| | Median (Mike's/Ours) | Total (Mike's/Ours) |
|---|---|---|
| FOCS | 38/38 | 3551/3315 |
| STOC | 21/21 | 3393/2975 |
| SODA | 14/13 | 2578/2520 |

A CALL FOR HELP:

Warning: the data displayed here is KNOWN to contain errors (our estimate is that around 10% of citation counts are incorrect). We would very much appreciate any efforts to reduce the error rate. If you would like to help:

1. choose a "random" conference/year pair (e.g., STOC 1997)

2. check if this pair has been already claimed in this blog; if yes, go to (1)

3. post a short message claiming your pair (e.g., "CLAIMING STOC 1997") on the blog.

4. follow the links to check the citations. For each incorrect citation, provide two lines: (1) paper title (2) a Google Scholar link to the correct paper

5. Email the file to Suresh Venkatasubramanian.

(and yes, we know that this algorithm has halting problems). Many thanks in advance. Of course, feel free to send us individual corrections as well.

Citation guidelines: The world of automatic citation engines is obviously quite messy, and sometimes it is not immediately clear what is the "right" citation count of a paper. The common "difficult case" is when you see several (e.g., conference and journal) versions of the same paper. In this case, our suggestion is that you ADD all the citation counts that you see, and send us the links to ALL the papers that you accounted for.

Acknowledgement: Adam Buchsbaum for suggesting the idea of, and generating data for, the short-long variants of SODA. David Johnson, Graham Cormode, and Sudipto Guha for bug fixes, useful suggestions and ideas for further plots (which we can look into after the data is cleaned up).
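As a quick sanity check on the validation numbers in this post, here is a small sketch that recomputes the sampled error rates and the gaps between Mike's totals and ours for 2000. It assumes a sample of 10 titles x 10 years = 100 per conference, as described in the validation step; the variable and function names are made up for illustration.

```python
# Mistakes found in the hand-checked sample, as reported in this post.
MISTAKES = {"FOCS": 7, "STOC": 9, "SODA": 11}
SAMPLE_PER_CONF = 10 * 10  # 10 random titles per year, 10 years (1997-2006)

# Year-2000 citation totals as (Mike's, ours), copied from the table.
TOTALS_2000 = {"FOCS": (3551, 3315), "STOC": (3393, 2975), "SODA": (2578, 2520)}


def error_rates(mistakes, sample_size):
    """Return (per-conference error rate, pooled error rate)."""
    per_conf = {conf: m / sample_size for conf, m in mistakes.items()}
    overall = sum(mistakes.values()) / (sample_size * len(mistakes))
    return per_conf, overall


def relative_gap(mike, ours):
    """Fraction by which our total falls short of Mike's."""
    return (mike - ours) / mike


if __name__ == "__main__":
    per_conf, overall = error_rates(MISTAKES, SAMPLE_PER_CONF)
    for conf, rate in per_conf.items():
        print(f"{conf}: sampled error rate {rate:.0%}")
    print(f"pooled: {overall:.0%}")
    for conf, (mike, ours) in TOTALS_2000.items():
        print(f"{conf}: totals differ by {relative_gap(mike, ours):.1%}")
```

The pooled rate works out to 27 mistakes in 300 samples, which is consistent with the 9-10% range quoted in the validation step.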

Tuesday, July 17, 2007

Theoretician, comedy writer, sailboat racer, ...

Since Bill brought up Jeff Westbrook (yes, people, I have co-written a paper with a Simpsons screenwriter, thank you very much), I thought that I should mention that he's now, along with everything else, a sailboat racer !

He's racing in a race called Transpac, from Long Beach, CA to Honolulu, HI. His boat is called the Peregrine, and it has a 4-man crew. You can track their position (they were leading when I last checked) at http://www.transpacificyc.org/ (go to "track charts," then click the center logo; when the page loads, hit boat selector, pick division 6, then hit boat selector again, then wait). You can also visit the boat blog (bblog?) at http://peregrine-transpac.blogspot.com/.

One should note that Jeff, being the considerate soul that he is, signed off on revisions to pending papers before he left.

(Source: Adam Buchsbaum)

Labels: community, miscellaneous

SODA vs STOC/FOCS

Michael Mitzenmacher has an interesting post up comparing citation counts for papers at SODA/STOC/FOCS 2000. The results are quite stunning (and SODA does not fare well). For a better comparison, we'd need more data of course, but this is a reasonable starting point.

As my (minor) contribution to this endeavour, here are the paper titles from 1997-2006 (last 10 years) for STOC, FOCS, and SODA. There might be errors: this was all done using this script. Someone more proficient in Ruby than I might try to hack this neat script written by Mark Reid for stemming and plotting keyword trends in ICML papers; it's something I've been hoping to do for STOC/FOCS/SODA papers.

If there are any Google researchers reading this, is there some relatively painless way using the Google API to return citation counts from Google Scholar ? In fact, if people were even willing to do blocks of 10 papers each, we might harness the distributed power of the community to get the citation counts ! If you're so inspired, email me stating which block of 10 (by line number, starting from 1) you're planning to do and for which conference. Don't let Michael's effort go in vain !

There are 681 titles from FOCS, 821 from STOC, and 1076 from SODA.

Sunday, July 08, 2007

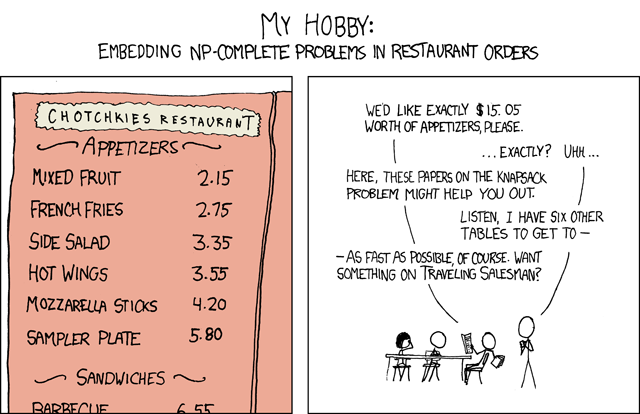

As usual, Randall Munroe is a genius

Friday, July 06, 2007

NSF RSS Feeds

The NSF now has RSS feeds for important sections of their website. I don't know how new this is, but it's great. Some of the useful feeds:

The NSF publications link has two new items that might be of interest. The first one is about a change in formatting for proposals. If I read it correctly, it now states that Times font cannot be used for writing proposals, rather Computer Modern (>= 10pt) is the preferred font.

a. Use of only the approved typefaces identified below, a black font color, and a font size of 10 points or larger must be used:

- For Windows users: Arial, Helvetica, Palatino Linotype, or Georgia

- For Macintosh users: Arial, Helvetica, Palatino, or Georgia

- For TeX users: Computer Modern

A Symbol font may be used to insert Greek letters or special characters; however, the font size requirement still applies;

It's a little confusing why Windows and Mac are of the same "type" as TeX.

The second document is a statistical overview of immigrant scientists and engineers in the US. Lots of interesting statistics to muse over, including this: fully 15% of all immigrant scientists and engineers in the US are Indian-born, followed by nearly 10% Chinese-born. Compare this to 19% for all of Europe.

SODA 2008 Server Issues ?

Piotr asks if anyone else has been having problems with the SODA 2008 server shortly before 5pm ET (i.e., around 90 minutes ago).

Update (7/7/07): At least one person thinks the server was on (and accepting submissions) for a few hours after the deadline. If this is true, this would be quite interesting, considering that I've never heard of this happening before (if the server did crash, it's a rational response of course).

P.S. Maybe the server was out watching fireworks.

Thursday, July 05, 2007

Here's your algorithmic lens

In studies of breast cancer cells, [senior investigator Dr. Bert] O'Malley and his colleagues showed how the clock works. Using steroid receptor coactivator-3 (SRC-3), they demonstrated that activation requires addition of a phosphate molecule to the protein at one spot and addition of an ubiquitin molecule at another point. Each time the message of the gene is transcribed into a protein, another ubiquitin molecule is chained on. Five ubiquitins in the chain and the protein is automatically destroyed.

A counter on a separate work tape: neat !

Main article at sciencedaily; link via Science and Reason.

Labels: miscellaneous, outreach

Wednesday, July 04, 2007

FOCS 07 list out

The list is here. As I was instructed by my source, let the annual "roasting of the PC members" begin !

63 papers were accepted, and on a first look there appears to be a nice mix of topics: it doesn't seem as if any one area stands out. Not many papers are online though, from my cursory random sample, so any informed commenting on the papers will have to wait. People who know more about any of the papers are free to comment (even if you're the author!). Does anyone know the number of submissions this year ? I heard it was quite high.

What's an advanced algorithm ?

And he's back !!!

After a rather refreshing (if overly hot) vacation in India, I'm back in town, forced to face reality, an impending move, and the beginning of semester. Yep, the summer is drawing to a close, and I haven't even stuffed my face with hot dogs yet, let alone 59.5 of them (is there something slightly deranged about ESPN covering "competitive eating" as a sport?).

Anyhow, I digress. The burning question of the day is this:

What is an advanced algorithm ?

It might not be your burning question of the day, but it certainly is mine, because I am the proud teacher of the graduate algorithms class this fall.

Some explanation is in order. At most schools, undergrads in CS take some kind of algorithms class, which for the sake of convenience we'll call the CLRS class (which is not to say that it can't be a KT class, or a DPV class, or a GT class, or....). At any rate, this is the first substantial algorithms class that the students take, and often it's the last one.

Now when you get into grad school, you often have to complete some kind of algorithms breadth requirement. In some schools, you can do this via an exam, and in others, you have to take a class: namely, a graduate algorithms class. Note also that some students taking this class might not have taken an undergrad algorithms class (for example, if they were math/physics majors).

What does one teach in such a class ? There are a number of conflicting goals here:

- Advertising: attract new students to work with you via the class. Obviously, you'd like to test them out first a little (this is not the only way of doing this)

- Pedagogy: there's lots of things that someone might need even if they don't do algorithms research: topics like approximations and randomization are not usually well covered in an intro algorithms class

- Retaining mindshare: you don't want to teach graduate algorithms as a CLRS class because you'll bore all the people who took such a class. On the other hand, you zoom too far ahead, and you lose the people who come to CS from different backgrounds. And the Lord knows we're desperate nowadays for people to come to CS from different backgrounds (more on this in a later post).

- Teach to the top: dive straight into advanced topics; anyone who needs to catch up does so on their own time. This is probably the most appealing solution from a lecturer's perspective, because it covers more interesting topics. It's also most likely to excite the few students who care. Be prepared for bumpy student evals, especially from disgruntled folks who just need a grade so that they can forget all about algorithms for the rest of their Ph.D.

- Teach to the bottom: Do a CLRS-redux, with maybe a few advanced topics thrown in at the end (NP-hardness might count as advanced). You'll bore the good ones, but you'll bring the entire class along.

All of this elides the most important point though: what constitutes advanced material ? I've seen graduate students struggle with NP-hardness, but this is covered in almost any undergraduate algorithms class (or should be). What about general technique classes like approximation algorithms and randomization, both of which merit classes in their own right and can only be superficially covered in any algorithms class (the same is true for geometry) ? Advanced data structures can span an entire course, and some of them are quite entertaining. Topics like FFTs, number-theoretic algorithms, (string) pattern matching, and flows also seem to fit into the purview of advanced topics, though they don't cohere as well with each other.

It seems like an advanced algorithms class (or the advanced portion of a graduate algorithms class) is a strange and ill-defined beast, defined at the mercy of the instructor (i.e., ME !). This problem occurs in computational geometry as well; to use graph-theoretic parlance, the tree of topics fans out very quickly after a short path from the root, rather than having a long and well-defined trunk.
