Mihai Pătraşcu, cause of numerous flame wars on the complexity blog, as well as lonely campaigner for the rights of esoteric data structures everywhere, has a blog, self-billed as 'Terry Tao without the Fields Medal'.

Enjoy !

Ruminations on computational geometry, algorithms, theoretical computer science and life

Tuesday, July 31, 2007

New TheoryCS Blog Alert !

Thursday, July 26, 2007

A counting gem

Consider the following puzzle:

Given 2n items, determine whether a majority element (one occurring at least n+1 times) exists. You are allowed one pass over the data (which means you can read the elements in sequence, and that's it), and exactly TWO units of working storage.

The solution is incredibly elegant, and dates back to the early 80s. I'll post the answer in the comments tomorrow, if it hasn't been posted already.

Update: A technical point: the problem is a promise problem, in that you are promised that such an element exists. Or, in the non-promise interpretation, you are not required to return anything reliable if the input does not contain a majority element.
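Spoiler for the impatient: one well-known algorithm from the early 80s that fits this description is the Boyer-Moore majority vote. Below is a minimal sketch, assuming that is the solution the puzzle has in mind; the function name `majority_candidate` is made up for illustration. The two units of storage are a candidate and a counter. Each element that differs from the candidate cancels one copy of it, and under the promise the majority element cannot be cancelled out completely.

```python
def majority_candidate(stream):
    """Single pass over the stream using two units of storage.

    Under the promise that some element occurs more than half the
    time, the returned candidate is that element. Without the promise
    the result is not reliable.
    """
    candidate = None  # storage unit 1: current guess
    count = 0         # storage unit 2: how many uncancelled copies of the guess
    for item in stream:
        if count == 0:
            # Nothing left of the old guess: adopt the current item.
            candidate, count = item, 1
        elif item == candidate:
            count += 1
        else:
            # A differing item cancels one copy of the guess.
            count -= 1
    return candidate


if __name__ == "__main__":
    # 2n = 6 items, and 1 occurs n + 1 = 4 times.
    print(majority_candidate([1, 2, 1, 1, 3, 1]))
```

Note that the sketch only reads the input through a `for` loop, so it also works on a generator that cannot be rewound.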

Tuesday, July 24, 2007

STOC/FOCS/SODA: The Cage Match (with data!)

(Ed: This post, and the attendant web page and data, was initiated as a joint effort of Piotr Indyk and myself. Many others have helped improve the data, presentation and conclusions.)

Inspired by Michael Mitzenmacher's flamebait post on SODA/STOC/FOCS, we decided to roll up our sleeves, and resolve the biggest outstanding issue in Theoretical Computer Science, namely the great "STOC/FOCS vs. SODA" debate ("P vs. NP" is a tainted second, sullied by all that money being offered to solve it). We have some interesting preliminary observations, and there are many interesting problems left open by our work ;)

The hypothesis:

There is a significant difference in citation patterns between STOC/FOCS and SODA

The plan:

First, we obtained the list of titles of conference papers appearing in STOC, FOCS and SODA in the last 10 years (1997-2006). We deliberately excluded 2007 because FOCS hasn't happened yet. We got this list from DBLP (Note: DBLP does not make any distinction between actual papers and tutorials/invited articles; we decided to keep all titles because there weren't that many tutorials/invited papers in any case).

For each title, we extracted the citation count from Google Scholar, using a process that we henceforth refer to as "The Extractor". Life is too short to describe what "The Extractor" is. Suffice it to say that its output, although not perfect, has been verified to be somewhat close to the true distribution (see below).

The results, and methodology, can be found at this link. The tables and graphs are quite self-explanatory. All source data used to generate the statistics are also available; you are welcome to download the data and make your own inferences. We'll be happy to post new results here and at the webpage.

OBSERVATIONS:

The main conclusion is that the hypothesis is valid: a systematic discrepancy between citation counts of SODA vs. STOC/FOCS does appear to exist. However, the discrepancy varies significantly over time, with years 1999-2001 experiencing the highest variations. It is interesting to note that 1999 was the year when SODA introduced four parallel sessions as well as the short paper option.

Although most of the stats for STOC and FOCS are quite similar, there appears to be a discrepancy at the end of the tail. Specifically, the 5 highest citation counts per year for STOC (years 1997-2001) are all higher than the highest citation count for FOCS (year 2001). (Note: the highest cited STOC article in 2001 was Christos Papadimitriou's tutorial paper on algorithms and game theory). The variation between SODA and STOC/FOCS in the 1999-2001 range shows up here too, between STOC and FOCS themselves. So maybe it's just something weird going on in these years. Who knows :)

Another interesting observation comes from separating the SODA cites into long and short paper groups (for the period 1999-2005). Plotting citations for short vs long papers separately indicates that the presence of short papers caused a net downward influence on SODA citation counts, but as fewer and fewer shorts were accepted, this influence decreased.

There are other observations we might make, especially in regard to what happens outside the peak citations, but for that we need more reliable data. Which brings us to the next point.

VALIDATION OF THE DATA:

To make sure that the output makes sense, we performed a few "checks and balances". In particular:

- we sampled 10 random titles from each of FOCS, STOC and SODA for each of the 10 years, and for each title we checked the citation count by hand. Results: there were 7 mistakes in FOCS, 9 in STOC, and 11 in SODA, indicating a current error rate in the 9-10% range.

- for each of FOCS, STOC, SODA, we verified (by hand) the values of the top 10 citation numbers, as reported by The Extractor

- we compared our stats for the year 2000 with the stats obtained by Michael. The results are pretty close:

| | Median (Mike's/Ours) | Total (Mike's/Ours) |
|---|---|---|
| FOCS | 38/38 | 3551/3315 |
| STOC | 21/21 | 3393/2975 |
| SODA | 14/13 | 2578/2520 |

A CALL FOR HELP:

Warning: the data displayed here is KNOWN to contain errors (our estimate is that around 10% of citation counts are incorrect). We would very much appreciate any efforts to reduce the error rate. If you would like to help:

1. choose a "random" conference/year pair (e.g., STOC 1997)

2. check if this pair has been already claimed in this blog; if yes, go to (1)

3. post a short message claiming your pair (e.g., "CLAIMING STOC 1997") on the blog.

4. follow the links to check the citations. For each incorrect citation, provide two lines: (1) paper title (2) a Google Scholar link to the correct paper

5. Email the file to Suresh Venkatasubramanian.

(and yes, we know that this algorithm has halting problems). Many thanks in advance. Of course, feel free to send us individual corrections as well.

Citation guidelines: The world of automatic citation engines is obviously quite messy, and sometimes it is not immediately clear what is the "right" citation count of a paper. The common "difficult case" is when you see several (e.g., conference and journal) versions of the same paper. In this case, our suggestion is that you ADD all the citation counts that you see, and send us the links to ALL the papers that you accounted for.

Acknowledgements: Thanks to Adam Buchsbaum for suggesting the idea of, and generating data for, the short-long variants of SODA, and to David Johnson, Graham Cormode, and Sudipto Guha for bug fixes, useful suggestions and ideas for further plots (which we can look into after the data is cleaned up).
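As a quick sanity check on the error rate quoted in the validation step, here is a small sketch of the arithmetic. It assumes each conference's hand-checked sample is 10 titles for each of 10 years (100 titles per conference), as described in the post; the names `MISTAKES` and `error_rates` are illustrative only.

```python
# Mistakes found by hand in the sampled titles, as reported in the post.
MISTAKES = {"FOCS": 7, "STOC": 9, "SODA": 11}

TITLES_PER_YEAR = 10  # sampled titles per conference per year
YEARS = 10            # 1997-2006


def error_rates(mistakes, sample_size):
    """Return (per-conference error rates, pooled error rate)."""
    per_conf = {conf: count / sample_size for conf, count in mistakes.items()}
    pooled = sum(mistakes.values()) / (sample_size * len(mistakes))
    return per_conf, pooled


per_conf, pooled = error_rates(MISTAKES, TITLES_PER_YEAR * YEARS)
for conf, rate in per_conf.items():
    print(f"{conf}: {rate:.0%}")
print(f"pooled: {pooled:.0%}")
```

The pooled figure is 27 mistakes out of 300 sampled titles, which is consistent with the 9-10% range stated in the post.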

Tuesday, July 17, 2007

Theoretician, comedy writer, sailboat racer, ...

Since Bill brought up Jeff Westbrook (yes, people, I have co-written a paper with a Simpsons screenwriter, thank you very much), I thought that I should mention that he's now, along with everything else, a sailboat racer !

He's racing in a race called Transpac, from Long Beach, CA to Honolulu, HI. His boat is called the Peregrine, and it has a 4-man crew. You can track their position (they were leading when I last checked) at http://www.transpacificyc.org/ (go to "track charts," then click the center logo; when the page loads, hit boat selector, pick division 6, then hit boat selector again, then wait). You can also visit the boat blog (bblog?) at http://peregrine-transpac.blogspot.com/.

One should note that Jeff, being the considerate soul that he is, signed off on revisions to pending papers before he left.

(Source: Adam Buchsbaum)

Labels: community, miscellaneous

SODA vs STOC/FOCS

Michael Mitzenmacher has an interesting post up comparing citation counts for papers at SODA/STOC/FOCS 2000. The results are quite stunning (and SODA does not fare well). For a better comparison, we'd need more data of course, but this is a reasonable starting point.

As my (minor) contribution to this endeavour, here are the paper titles from 1997-2006 (last 10 years) for STOC, FOCS, and SODA. There might be errors: this was all done using this script. Someone more proficient in Ruby than I might try to hack this neat script written by Mark Reid for stemming and plotting keyword trends in ICML papers; it's something I've been hoping to do for STOC/FOCS/SODA papers.

If there are any Google researchers reading this, is there some relatively painless way using the Google API to return citation counts from Google Scholar ? In fact, if people were even willing to do blocks of 10 papers each, we might harness the distributed power of the community to get the citation counts ! If you're so inspired, email me stating which block of 10 (by line number, starting from 1) you're planning to do and for which conference. Don't let Michael's effort go in vain !

There are 681 titles from FOCS, 821 from STOC, and 1076 from SODA.

Sunday, July 08, 2007

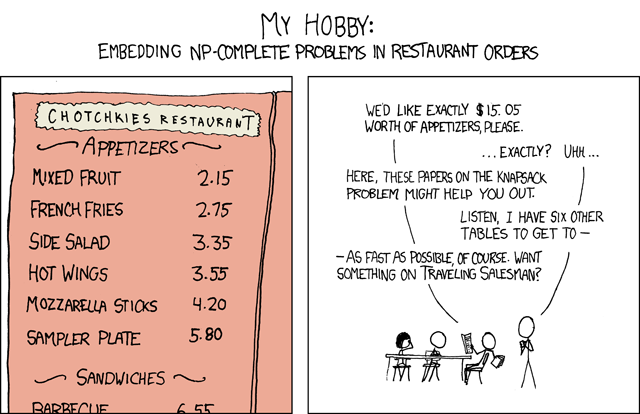

As usual, Randall Munroe is a genius

Friday, July 06, 2007

NSF RSS Feeds

The NSF now has RSS feeds for important sections of their website. I don't know how new this is, but it's great. Some of the useful feeds:

The NSF publications link has two new items that might be of interest. The first one is about a change in formatting for proposals. If I read it correctly, it now states that Times font cannot be used for writing proposals, rather Computer Modern (>= 10pt) is the preferred font.

a. Use of only the approved typefaces identified below, a black font color, and a font size of 10 points or larger must be used:

- For Windows users: Arial, Helvetica, Palatino Linotype, or Georgia

- For Macintosh users: Arial, Helvetica, Palatino, or Georgia

- For TeX users: Computer Modern

A Symbol font may be used to insert Greek letters or special characters; however, the font size requirement still applies;

It's a little confusing why Windows and Mac are of the same "type" as TeX.

The second document is a statistical overview of immigrant scientists and engineers in the US. Lots of interesting statistics to muse over, including this: fully 15% of all immigrant scientists and engineers in the US are Indian-born, followed by nearly 10% Chinese-born. Compare this to 19% for all of Europe.

SODA 2008 Server Issues ?

Piotr asks if anyone else has been having problems with the SODA 2008 server shortly before 5pm ET (i.e., around 90 minutes ago).

Update (7/7/07): At least one person thinks the server was on (and accepting submissions) for a few hours after the deadline. If this is true, this would be quite interesting, considering that I've never heard of this happening before (if the server did crash, it's a rational response of course).

P.S Maybe the server was out watching fireworks.

Thursday, July 05, 2007

Here's your algorithmic lens

In studies of breast cancer cells, [senior investigator Dr. Bert] O'Malley and his colleagues showed how the clock works. Using steroid receptor coactivator-3 (SRC-3), they demonstrated that activation requires addition of a phosphate molecule to the protein at one spot and addition of an ubiquitin molecule at another point. Each time the message of the gene is transcribed into a protein, another ubiquitin molecule is chained on. Five ubiquitins in the chain and the protein is automatically destroyed.

A counter on a separate work tape: neat !

Main article at sciencedaily; link via Science and Reason.

Labels: miscellaneous, outreach

Wednesday, July 04, 2007

FOCS 07 list out

The list is here. As I was instructed by my source, let the annual "roasting of the PC members" begin !

63 papers were accepted, and on a first look there appears to be a nice mix of topics: it doesn't seem as if any one area stands out. Not many papers are online though, from my cursory random sample, so any informed commenting on the papers will have to wait. People who know more about any of the papers are free to comment (even if you're the author!). Does anyone know the number of submissions this year ? I heard it was quite high.

What's an advanced algorithm ?

And he's back !!!

After a rather refreshing (if overly hot) vacation in India, I'm back in town, forced to face reality, an impending move, and the beginning of semester. Yep, the summer is drawing to a close, and I haven't even stuffed my face with hot dogs yet, let alone 59.5 of them (is there something slightly deranged about ESPN covering "competitive eating" as a sport?).

Anyhow, I digress. The burning question of the day is this:

What is an advanced algorithm ?

It might not be your burning question of the day, but it certainly is mine, because I am the proud teacher of the graduate algorithms class this fall.

Some explanation is in order. At most schools, undergrads in CS take some kind of algorithms class, which for the sake of convenience we'll call the CLRS class (which is not to say that it can't be a KT class, or a DPV class, or a GT class, or....). At any rate, this is the first substantial algorithms class that the students take, and often it's the last one.

Now when you get into grad school, you often have to complete some kind of algorithms breadth requirement. In some schools, you can do this via an exam, and in others, you have to take a class: namely, a graduate algorithms class. Note also that some students taking this class might not have taken an undergrad algorithms class (for example, if they were math/physics majors).

What does one teach in such a class ? There are a number of conflicting goals here:

- Advertising: attract new students to work with you via the class. Obviously, you'd like to test them out first a little (this is not the only way of doing this)

- Pedagogy: there's lots of things that someone might need even if they don't do algorithms research: topics like approximations and randomization are not usually well covered in an intro algorithms class

- Retaining mindshare: you don't want to teach graduate algorithms as a CLRS class because you'll bore all the people who took such a class. On the other hand, if you zoom too far ahead, you lose the people who come to CS from different backgrounds. And the Lord knows we're desperate nowadays for people to come to CS from different backgrounds (more on this in a later post).

- Teach to the top: dive straight into advanced topics; anyone who needs to catch up does so on their own time. This is probably the most appealing solution from a lecturer's perspective, because it covers more interesting topics. It's also most likely to excite the few students who care. Be prepared for bumpy student evals, especially from disgruntled folks who just need a grade so that they can forget all about algorithms for the rest of their Ph.D.

- Teach to the bottom: Do a CLRS-redux, with maybe a few advanced topics thrown in at the end (NP-hardness might count as advanced). You'll bore the good ones, but you'll bring the entire class along.

All of this elides the most important point though: what constitutes advanced material ? I've seen graduate students struggle with NP-hardness, but this is covered in almost any undergraduate algorithms class (or should be). What about general technique classes like approximation algorithms and randomization, both of which merit classes in their own right and can only be superficially covered in any algorithms class (the same is true for geometry) ? Advanced data structures can span an entire course, and some of them are quite entertaining. Topics like FFTs, number-theoretic algorithms, (string) pattern matching, and flows also seem to fit into the purview of advanced topics, though they don't cohere as well with each other.

It seems like an advanced algorithms class (or the advanced portion of a graduate algorithms class) is a strange and ill-defined beast, defined at the mercy of the instructor (i.e., ME !). This problem occurs in computational geometry as well; to use graph-theoretic parlance, the tree of topics fans out very quickly after a short path from the root, rather than having a long and well-defined trunk.

Friday, June 08, 2007

My Biased Coin: A New Blog !!

It's been a while since I've been able to announce a new CS blog. Please point your bookmarks/RSS newsreaders/browsers to Michael Mitzenmacher's new blog, My Biased Coin. Michael has occasionally posted over here, and has often had comments and suggestions for me. When he's not brokering peace agreements between power law distributions and log normal distributions, Michael corrupts young grad students at Harvard for a living, filling their minds with all kinds of random bits that are occasionally error corrected.

Labels: blogosphere, community

SoCG 2007: CUP takes over all of geometry...

The good news from today is that the Demaine-O'Rourke book on folding and linkages is finally out. Erik had a copy of the book at the conference, and it's a handsome volume that will hopefully soon reside on my bookshelf.

The authors have a web page associated with the book, and it has a number of applets that go along with constructions from the text.

The publisher of this book is Cambridge University Press, which is great, because I love their fonts :). CUP clearly has a plan for domination of the entire computational geometry catalog: along with the folding book, they are publishing:

- Tamal Dey's book on curve and surface reconstruction.

- Subir Ghosh's new book on visibility algorithms in the plane.

- Narasimhan and Smid's book on geometric spanners.

Labels: community, miscellaneous, socg

Wednesday, June 06, 2007

SoCG 2007: Approximate Clustering

I was listening to a couple of talks that improve known bounds for various kinds of approximate clustering in high dimensions, and I got to thinking.

One of the revolutions in geometry over the last 10 years has been the development of nontrivial tools for dealing with approximations in high dimensions. This is of course necessitated by the curse of dimensionality, and the hardness of most high-D data analysis problems (most exact solutions are exponential in the dimension). So rather than computing the optimal solution to some problem on n points in d dimensions, we compute a (1+e)-approximation to the optimal solution.

One problem this creates is that every algorithm is now described by a complicated expression involving three parameters (n, d, e). Some algorithms are exponential in 1/e, but polynomial in d. Some are poly in 1/e, and exponential in d. The exponent could have a base of n, or 2, or even d, or 1/e.

In short, it's an unholy mess of strictly incomparable methods that trade off different parameters against each other.
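To see what "strictly incomparable" means in practice, here is a small sketch comparing two made-up running-time expressions. Both bounds are hypothetical and chosen only for illustration (they are not taken from any paper or talk); `inv_eps` stands for 1/e, and all function names are invented for this example.

```python
def cost_exp_in_eps(n, d, inv_eps):
    """Hypothetical bound n * d * 2^(1/e): polynomial in d, exponential in 1/e."""
    return n * d * 2 ** inv_eps


def cost_exp_in_d(n, d, inv_eps):
    """Hypothetical bound n * (1/e)^d: polynomial in 1/e, exponential in d."""
    return n * inv_eps ** d


def cheaper(n, d, inv_eps):
    """Name the hypothetical bound that is smaller at these parameter values."""
    a = cost_exp_in_eps(n, d, inv_eps)
    b = cost_exp_in_d(n, d, inv_eps)
    return "exp-in-1/eps" if a < b else "exp-in-d"


if __name__ == "__main__":
    # Low dimension, fine approximation (e = 0.1).
    print(cheaper(n=1000, d=2, inv_eps=10))
    # High dimension, coarse approximation (e = 0.5).
    print(cheaper(n=1000, d=20, inv_eps=2))
```

Neither expression dominates: which one wins flips depending on where (d, e) sits, which is exactly why a "user" cannot simply pick the best algorithm from a table of bounds.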

This makes life for me, the "user" of approximation technology, rather difficult. What I'd really like to understand are the gadgets that transform one kind of bound to another (and there are many such gadgets: discretization, enumeration, random sampling, etc). But it's hard to gather these from the papers directly: these gadgets (the really useful tools) are buried deep inside lemmas, and inside people's heads.

What I'd like to see is some kind of "taxonomization" or classification of the different "tricks of the trade" in high-dimensional geometric approximation, with some sense of which techniques apply when, and why. In fact, I like this idea so much I might even try to run a seminar on it..

SoCG 2007: "Magic Hausdorff Lions Have Happy Endings"

(It's at the point now where people complain if the business meeting post is NOT up 5 minutes after the actual meeting. Sigh...)

Summary for people in a hurry:

- Attendance this year was a record low

- Next PC Chair: Monique Teillaud

- SoCG 2008 will be at the University of Maryland (rather than Virginia: long story)

- SoCG 2009 (by a 4-1 margin) will be in Aarhus, Denmark. BEER !!!!!!!!!

Local News:

Otfried Cheong was in charge of local arrangements. I have to say that the hotel we are at is quite amazing: It's on the Bomun Lake, and right outside the hotel is this lake-side path that leads to all the restaurants one can imagine, a tourist village with acres of motorized scooters (!), and some not-disgusting coffee. The hotel facilities themselves are excellent (again, modulo the coffee); much better for a cheaper price in comparison to a US hotel. And as I sit here writing this, I can hear the rehearsals for our Korean classical music concert tonight.

Unfortunately, there was no one here to enjoy it ! Attendance is down tremendously (111 people), a factor exacerbated by FCRC, being held in a day or two in the exotic locale of San Diego (fish tacos ! blech!), and other related conferences. The relative remoteness of Gyeongju played no small role in this, I imagine.

There was much discussion about whether we should continue to be sponsored by ACM or not (the main issue is cost, and logistics when organizing in a non-US location); no resolution on this point.

PC Stuff: (obvious caveat: I was on the PC this year)

Jeff Erickson presented the usual stats (45/139 papers accepted, out of 286 submitting authors, and with 115+ external reviews). It turns out that the formula for success at SoCG this year involves

submitting seven papers, with 4 co-authors, from an email address in Pakistan.

The title of this post was composed from words in accepted papers.

Lots of other stats, nothing particularly enlightening.

The Near Future.

Monique Teillaud will chair the SoCG 2008 program committee. The committee has 17 people on it, an unusually large number. She's hoping to get 4 reviews per paper, so maybe that's why.

David Mount explained why we had to move from Alexandria, Virginia to UMD for SoCG 2008. Apparently the main hotel we would have used was bought out and is now much more expensive. Boo hoo. On the bright side, UMD will be a lot cheaper in terms of accommodation.

SoCG 2009.

We had two competing bids for SoCG 2009. Aarhus (Beer ! Lego ! Beer ! City life ! Beer!) and Portland (Roses ! Beer ! More Roses ! Powell's Books ! Microbreweries!).

No, we are not a bunch of boozing alcoholics.

Lars did a bang-up job with his Aarhus presentation. John Hershberger did a great presentation on Portland (a great city to visit, btw), but it was no contest. By a 4-1 margin, Aarhus it is !

Open Discussion.

It was getting rather late by the time we got to open discussions. Guenter Rote initiated the question, "Should we have a rebuttal process for reviewing papers?", apparently in response to an aggrieved set of rejected authors.

We had a heated discussion on this point, and although we ultimately went nowhere, it was felt that we should continue things electronically (i.e., on blogs). I'll post at greater length on this issue later, but to summarize the main points pro and con:

Pro:

- Creates an improved sense of fairness

- A safety net for potential errors

Con:

- Time waste for reviewers

- Time waste for authors (!) (according to Jack Snoeyink), but I am not convinced that this is a valid argument

- Won't make a significant difference

SoCG 2007: Geometric Views of Learning

I've been fairly remiss in my SoCG blogging; I'll blame it on having session chair duties, and not wanting to lug my laptop around.

The invited talk was by Partha Niyogi from the University of Chicago on 'A Geometric Perspective on Machine Learning'. You may remember his work from my earlier post on the estimation of the surface area of a convex body (read the comments). More importantly, he is part of the group that developed a method known as Laplacian Eigenmaps for learning a manifold from a collection of data.

Manifold learning is a new set of problems in machine learning that has interesting connections to algorithms and geometry. The basic problem is as follows. Given a collection of (unlabelled) data inhabiting some high dimensional space, can you determine whether they actually lie on some lower dimensional manifold in this space ?

Why do we care ? The problem with any data analysis problem in high dimensionality is the rather poetically named 'curse of dimensionality', which basically says that any interesting data analysis algorithm runs in time exponential in the dimension of the data. For data that lives in 100s of dimensions, this is rather bad news.

However, "data that lives in 100 dimensions" is really an artifact of the way we represent data, slapping on dimensions willy-nilly for every attribute that might be relevant. What one often expects is that data doesn't really lie in 100 dimensions, but in some lower dimensional manifold of it. A beautiful example of this was given by Partha in his talk: he described the problem of inferring a sound wave signal generated at one end of a tube by listening in at the other end. By Fourier analysis, you can think of both signals as living in an infinite dimensional space, but suppose we now vary the tube length, for a fixed input signal. Then the output signal varies smoothly along a curve (i.e., a 1-d manifold) in this infinite dimensional space.

"So what ?" one might ask. The problem is that the standard method of doing data analysis is to translate the problem of interest into some property of the distances between points in the high dimensional space. If the data really lies on some kind of curved surface, then the "distance in ambient space" does not reflect the true structure of the data. What we really need is "distance along the manifold", which we could do if we could reconstruct the manifold !
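To make the gap between the two notions of distance concrete, here is a small sketch (my own toy example, not something from the talk). It assumes the "manifold" is a unit semicircle in the plane, and it approximates distance along the manifold by summing the gaps between closely spaced samples; the names `ambient_distance` and `path_distance` are made up for illustration.

```python
import math


def ambient_distance(p, q):
    """Straight-line (Euclidean) distance in the ambient space."""
    return math.dist(p, q)


def path_distance(samples):
    """Approximate distance along the curve by summing consecutive gaps."""
    return sum(math.dist(a, b) for a, b in zip(samples, samples[1:]))


# Hypothetical data: n + 1 samples along a unit semicircle (a 1-d manifold in 2-d).
n = 1000
samples = [(math.cos(math.pi * k / n), math.sin(math.pi * k / n))
           for k in range(n + 1)]

chord = ambient_distance(samples[0], samples[-1])  # cuts straight across
arc = path_distance(samples)                       # follows the curve
```

The two endpoints are 2 apart in the ambient plane but roughly pi apart along the curve, and the discrepancy only gets worse as the manifold curls up more tightly.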

The key idea of the Laplacian Eigenmaps work is this: If you set up an appropriate weighted graph on the data points (where each edge has a weight that is exponentially related to the distance between the points) and compute the Laplacian of this graph, you get an approximation that converges (as the data size increases) to the Laplacian of the underlying manifold !! This assumes that the data was sampled uniformly (or mostly uniformly) from the manifold. Moreover, the convergence happens at a rate that depends only on the dimension of the manifold, rather than the dimension of ambient space.
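For the curious, the graph construction can be sketched in a few lines. This is only a minimal illustration under my own assumptions, not the authors' implementation: I use heat-kernel weights exp(-||x_i - x_j||^2 / t) on a fully connected graph, with a made-up bandwidth parameter `t` and a hypothetical function name `heat_kernel_laplacian`. A practical version would likely connect only nearby points and then go on to compute eigenvectors of the result.

```python
import math


def heat_kernel_laplacian(points, t=1.0):
    """Return the unnormalized graph Laplacian L = D - W as a list of rows.

    W[i][j] = exp(-||x_i - x_j||^2 / t) for i != j, and D is the diagonal
    matrix of row sums of W. The bandwidth t and the fully connected graph
    are illustrative assumptions.
    """
    n = len(points)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            W[i][j] = W[j][i] = math.exp(-sq_dist / t)
    # Off-diagonal entries are -W[i][j]; the diagonal holds the weighted degree.
    L = [[-W[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        L[i][i] = sum(W[i])
    return L


# Hypothetical data: 12 samples from a circle sitting inside 3-d ambient space.
circle = [(math.cos(2 * math.pi * k / 12), math.sin(2 * math.pi * k / 12), 0.0)
          for k in range(12)]
laplacian = heat_kernel_laplacian(circle, t=0.5)
```

Each row of the resulting matrix sums to zero and the matrix is symmetric, which is the discrete counterpart of the Laplacian annihilating constant functions.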

There are many ramifications of this idea, that connect to shape modelling, homology, and even volume estimation. But it reinforces the idea of the Laplacian as a key geometric construct that can be modelled combinatorially in a consistent way.

Sunday, June 03, 2007

9th Carnival of Mathematics

From A to Z, at JD2718.

Monday, May 21, 2007

Creating a new publication model

I like to gripe about our current conference/journal system, and how ungainly a method it is for publishing and disseminating quality work. Not surprisingly, other areas of computer science appear to have similar issues as well.

Hal Daume, my colleague at the U. of U., has sparked off a rather interesting discussion with his post on NLPers about the need for a new open-access journal for NLP research. The original motivation for his post is partly NLP-specific, but the evolving discussion over at his wiki provides a closeup view of the process of designing a new publication model that sits somewhere between traditional journals and traditional conferences.

It's too early to tell what will come of this, but if their model gains some traction, it might provide a good exemplar for future reform in other disciplines in computer science.

Labels: community, publishing

Sunday, May 20, 2007

Numb3rs wins NSF award

I used to blog every episode of Numb3rs in its first season, and got bored of doing this soon after. However, I've continued to watch the show; I don't have the heart to avoid watching a show that involves mathematics (and more often than not, computer science). It's been surprisingly decent in its three years, as long as you're willing to concede all kinds of miraculous data mining feats performed in the span of a few hours.

However, I always wondered if it could make it on a mainstream network for very long. After all, ratings are king, and how long could such a tech-heavy series last ? After all, the crime procedural aspects of the show are hardly unique, and with the monster success of CSI, even more scientifically oriented crime series were clogging up the airwaves.

Thus, I was pleasantly surprised to hear that Numb3rs is now the "most-watched show" on Friday nights, clocking in at around 12 million viewers. It's been picked up for a fourth season, and ended season three with a dramatic moment, as well as interesting existential angst for the main characters. In fact, I suspect one of the reasons Numb3rs has done so well is that they've done a fairly realistic job of portraying some of the tensions inherent in the life of the researcher (even if the mathematics itself, and the way the characters talk about it, is still somewhat cringeworthy).

The NSF (or technically, the NSB, the board that oversees the NSF) has recognized the success of Numb3rs as well. The show and its creators were just given a public service award for

...extraordinary contributions to increase public understanding of science. Recipients are chosen for their contributions to public service in areas such as: increasing the public's understanding of the scientific process and its communication; contributing to the development of broad science and engineering policy; promoting the engagement of scientists and engineers in public outreach; and fostering awareness of science and technology among broad segments of the population.

Friday, May 18, 2007

8th carnival of mathematics

It's back-to-school night at the carnival of mathematics. Time to revisit, reflect, and ponder on things we think we already know.

Estraven at Proving Theorems wishes that mathematicians today spent more time revisiting and bring up to date older, more inaccessible works of mathematics. This is discussed in the context of a re-publication of some of Grothendieck's early work on schemes.

There's a lovely quote at the end of the article, one that I cannot but help share:

Burnside's Lemma ultimately deals with counting symmetries: Ian Stewart has a new book out on the history of symmetry titled, "Why Beauty is Truth: A History of Symmetry". In a post at the Brittanica blogs, he describes why he decided to write this book.

In an ongoing series, John Amstrong continues his unapologetic look at coloring knots. I'd say any topic that has phrases like 'involuntary quandle' is worth studying. Of course, the original tying-yourself-in-knots proof was the diagonalization method of Cantor, which Mark Chu-Carroll is kind enough to explain to us, while he takes a timeout from beating errant creationists with sticks of logic. Mark notes that he's been going back to some old books on Set theory and is thoroughly enjoying them, another example of the theme of the day :)

Godel's incompletenes theorem is another of the tying-yourself-in-knots variety, and this year's Godel prize winner is a result in the same vein, showing why certain "natural proof structures" cannot be used to prove P != NP. Siva and Bill Gasarch have the scoop.

Walt at Ars Mathematica catches up to the Bulletin of the AMS, highlighting some nice expository articles that should make Estraven happy.

It's time to get educational now. Dave Marain helps high school math teachers everywhere with lesson plans for teaching quadratic systems and tangents without calculus. Mikael Johansson shows bravery far above and beyond the call of duty, explaining fundamental groupoids to a group of 9th graders. Heck, I don't even understand what a groupoid is, and I read John Baez all the time !

jd2718 shows his students how Fermat is connected to them. It would be remiss of me not to give a shout out to the Mathematics Genealogy Project. We've all heard the horror stories about the students who write "dy/dx = y/x"; Dan Greene at The Exponential Curve talks of students who write "log 5/log 2 = 5/2" and wonders if we need to change the notation for log to something more "mathematical".

I am delighted, and surprised, to see the quality of reports produced in the undergraduate

automata class that Leo Kontorovich is TAing. Read more about what his students did here. Andy Drucker weaves tales (tails?) of dogs eating steak in mineshafts, and cats climbing GMO-compromised trees, to explain some of the subtler details of computability theory.

We're almost ready to wrap up this edition of the carnival. Chew on some simple brainteasers that will excercise regions of your brain that you may not have known existed ! And review the history of calculating devices, with some speculation on what future calculators might look like.

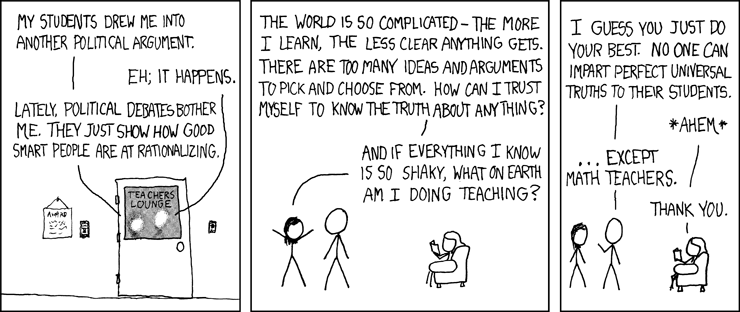

Finally, no carnival of mathematics would be complete without a cartoon by XKCD:

And that's all, folks ! Keep those posts coming. The next Carnival of Mathematics is in two weeks, hosted by JD2718.

Estraven at Proving Theorems wishes that mathematicians today spent more time revisiting and bringing up to date older, more inaccessible works of mathematics. This is discussed in the context of a re-publication of some of Grothendieck's early work on schemes.

There's a lovely quote at the end of the article, one that I cannot help but share:

I could of course keep writing paper after paper. But there is so much beautiful mathematics out there that I don't know yet, and I want to read at least some of it before I die.

I remember first sensing beauty when I first learnt Burnside's Lemma: Leadhyena Inrandomtan at Viviomancy has a detailed post on this simple and yet elegant result in combinatorics.

Burnside's Lemma ultimately deals with counting symmetries: Ian Stewart has a new book out on the history of symmetry titled, "Why Beauty is Truth: A History of Symmetry". In a post at the Britannica blogs, he describes why he decided to write this book.

In an ongoing series, John Armstrong continues his unapologetic look at coloring knots. I'd say any topic that has phrases like 'involuntary quandle' is worth studying. Of course, the original tying-yourself-in-knots proof was the diagonalization method of Cantor, which Mark Chu-Carroll is kind enough to explain to us, while he takes a timeout from beating errant creationists with sticks of logic. Mark notes that he's been going back to some old books on set theory and is thoroughly enjoying them, another example of the theme of the day :)

Godel's incompleteness theorem is another of the tying-yourself-in-knots variety, and this year's Godel prize winner is a result in the same vein, showing why certain "natural proof structures" cannot be used to prove P != NP. Siva and Bill Gasarch have the scoop.

Walt at Ars Mathematica catches up to the Bulletin of the AMS, highlighting some nice expository articles that should make Estraven happy.

It's time to get educational now. Dave Marain helps high school math teachers everywhere with lesson plans for teaching quadratic systems and tangents without calculus. Mikael Johansson shows bravery far above and beyond the call of duty, explaining fundamental groupoids to a group of 9th graders. Heck, I don't even understand what a groupoid is, and I read John Baez all the time !

jd2718 shows his students how Fermat is connected to them. It would be remiss of me not to give a shout out to the Mathematics Genealogy Project. We've all heard the horror stories about the students who write "dy/dx = y/x"; Dan Greene at The Exponential Curve talks of students who write "log 5/log 2 = 5/2" and wonders if we need to change the notation for log to something more "mathematical".

I am delighted, and surprised, to see the quality of reports produced in the undergraduate automata class that Leo Kontorovich is TAing. Read more about what his students did here. Andy Drucker weaves tales (tails?) of dogs eating steak in mineshafts, and cats climbing GMO-compromised trees, to explain some of the subtler details of computability theory.

We're almost ready to wrap up this edition of the carnival. Chew on some simple brainteasers that will exercise regions of your brain that you may not have known existed ! And review the history of calculating devices, with some speculation on what future calculators might look like.

Finally, no carnival of mathematics would be complete without a cartoon by XKCD:

And that's all, folks ! Keep those posts coming. The next Carnival of Mathematics is in two weeks, hosted by JD2718.
